EDA for AWS operations

Welcome

Great that you’re here. Today, I will write a bit about Event-Driven Ansible (EDA) and how it can improve the operational efficiency of our AWS environment. But wait, what do we mean by Event-Driven Ansible? My favorite sentence from the documentation is “EDA provides a way of codifying operational logic”. In short, we can write the logic that responds to different events as code. For example, we can trigger playbook execution from Lambdas, make our self-service processes a bit more ops-oriented, or introduce ChatOps.

Before we begin

Native AWS services?

A natural question here: why not use Systems Manager, a dedicated service for operations? Or OpsWorks? Or even Lambdas? Let’s break it down.

Systems Manager

AWS has many complementary solutions with quite similar functionality, so we will start with the best-known and easiest to use. Systems Manager is an agent-based solution for managing a whole fleet of instances, in the cloud or on-prem: wherever the agent is installed, Systems Manager can be used. With Automation we also get more than 370 predefined tasks written in PowerShell, Bash, or Python, and that is great. If we want to schedule server updates, we can run the operation on the whole fleet at once (which is not the best idea) or on a subset selected by tags and groups. So what’s the challenge? Systems Manager isn’t declarative. To add a custom SSM document that creates a new folder, we need to write, for example:

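---
schemaVersion: '2.2'
description: State Manager mkdir example
parameters: {}
mainSteps:
  - action: aws:runShellScript
    name: makeOptTest
    inputs:
      runCommand:
        # Run Command executes as root, so no sudo is needed.
        # The string is quoted because "admin: " would otherwise be parsed as a YAML key.
        - "mkdir -p /opt/test && chmod -R 0440 /opt/test && chown admin: /opt/test"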

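It works, but it feels like a step back. We describe the commands to run rather than the state we want, and nothing checks whether that state is already in place. For a service restart it’s a great solution, but refreshing a system or building more complex logic quickly becomes painful.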
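Here is a minimal Python sketch of the idea behind a declarative tool. It describes the desired state, compares it with reality, changes only what differs, and reports whether anything changed. This is similar in spirit to how Ansible modules report "changed", but it is my own illustration, not how Systems Manager or Ansible is implemented. ensure_directory is a hypothetical helper, and ownership is left out to keep the sketch portable.

```python
import os
import stat
import tempfile


def ensure_directory(path: str, mode: int) -> bool:
    """Bring `path` to the desired state: an existing directory with `mode`.

    Returns True if anything had to change, False if the state already matched,
    so running it twice in a row is safe and the second call is a no-op.
    """
    changed = False
    if not os.path.isdir(path):
        os.makedirs(path)
        changed = True
    current_mode = stat.S_IMODE(os.stat(path).st_mode)
    if current_mode != mode:
        os.chmod(path, mode)
        changed = True
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        target = os.path.join(base, "test")
        print(ensure_directory(target, 0o750))  # True: directory created
        print(ensure_directory(target, 0o750))  # False: nothing to do
```

The imperative SSM command, by contrast, always runs its commands and leaves it to the script author to make a second run safe.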

OpsWorks

The service was designed to offer managed Chef and Puppet to customers. Unfortunately, all of its flavors reach End of Life by the end of May 2024. At the time of writing this article (first half of March 2024), you can read about it on the public OpsWorks service page. To sum up: a nice solution, but based on Chef/Puppet rather than SaltStack, and the concept of stacks could be a blocker for a multi-cloud environment.

Lambdas

Lambda and the whole serverless ecosystem were called the “next big thing”, and a lot of people advocated using functions as much as possible, everywhere. After a while, we realized that a Lambda-based architecture is very hard to keep clean and simple, and that it can easily make our bill much higher. That is why we started using functions only where it makes sense, for example for image or data processing. Most importantly, functions can act as glue for everything: connecting services that aren’t natively integrated, executing tasks, or even stopping an instance when some criteria aren’t met. The catch is that using Lambdas for tasks like a service restart is quite hard and not very time-efficient. The main reason is that functions operate on a different layer, let’s say the hypervisor level, so server-level operations become a challenge.

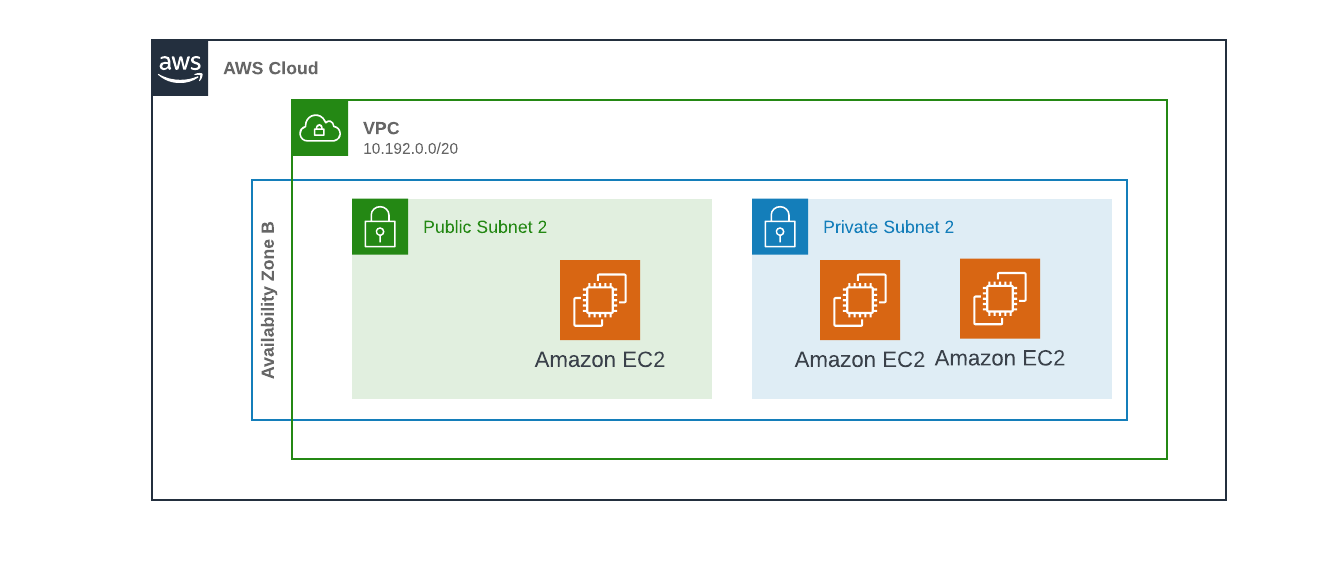

Initial infrastructure

First, we need to set up our base infrastructure and install the needed packages. Let’s keep the infrastructure very simple: just three small VMs. The ansible-runner and a compute node sit in a private subnet, and the third VM acts as a bastion host for reaching our ansible-runner, just to keep the whole setup a bit more “real world” and secure.


As it’s quite a straightforward task, I will use AWS CDK for it. Please take a look at the user-data part, which is where we install the EDA components:

userData.addCommands(
  `dnf --assumeyes install git maven gcc java-17-openjdk python3-pip python3-devel`,
  `echo 'export JAVA_HOME=/usr/lib/jvm/jre-17-openjdk' >> /etc/profile`,
  `pip3 install wheel ansible ansible-rulebook ansible-runner`,
  `echo 'export PATH=$PATH:/usr/local/bin' >> /etc/profile`,
  `mkdir /opt/ansible`,
  `git clone https://github.com/3sky/eda-example-ansible /opt/ansible/eda-example-ansible`,
  `cd /opt/ansible/eda-example-ansible && /usr/local/bin/ansible-galaxy collection install -r collections/requirements.yml -p collections`,
  `chown -R ec2-user:ec2-user /opt/ansible/*`,
);
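Once cloud-init has finished, it’s worth checking on the runner that the user data actually did its job. Below is a minimal sketch that assumes the executables installed above (git, java, ansible-rulebook, ansible-runner, ansible-galaxy). missing_tools is a hypothetical helper built on shutil.which. The user data calls /usr/local/bin/ansible-galaxy explicitly and appends /usr/local/bin to PATH, so the script searches that directory too.

```python
import os
import shutil
from typing import Iterable, Optional

# Executables the user data above is expected to provide on the runner.
REQUIRED_TOOLS = ("git", "java", "ansible-rulebook", "ansible-runner", "ansible-galaxy")


def missing_tools(tools: Iterable[str] = REQUIRED_TOOLS, path: Optional[str] = None) -> list[str]:
    """Return the tools that cannot be found on PATH (or on `path`, if given)."""
    return [tool for tool in tools if shutil.which(tool, path=path) is None]


if __name__ == "__main__":
    # Search the current PATH plus /usr/local/bin, mirroring the PATH export in the user data.
    search_path = os.environ.get("PATH", "") + os.pathsep + "/usr/local/bin"
    missing = missing_tools(path=search_path)
    print("missing:", missing or "nothing")
    print("JAVA_HOME:", os.environ.get("JAVA_HOME", "<not set>"))
```

Running it with python3 as ec2-user on the runner should report nothing missing once the setup is complete.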

When the stack is ready, we can connect with a regular ~/.ssh/config file:

Host jump
  PreferredAuthentications publickey
  IdentitiesOnly=yes
  IdentityFile ~/.ssh/id_ed25519_eda
  User ec2-user
  # this one could change, as it's a public IP
  Hostname 3.126.251.7

Host runner
  PreferredAuthentications publickey
  IdentitiesOnly=yes
  ProxyJump jump
  IdentityFile ~/.ssh/id_ed25519_eda
  User ec2-user
  Hostname 10.192.0.99

Host compute
  PreferredAuthentications publickey
  IdentitiesOnly=yes
  ProxyJump jump
  IdentityFile ~/.ssh/id_ed25519_eda
  User ec2-user
  Hostname 10.192.0.86

With that being done, we can focus on popular use cases.

EDA configuration

As today’s article is an introduction to EDA, we will focus on a basic HTTP listener and basic service configuration. The next article will focus on more complex event sources like MSK or watchdogs.

Server configuration

First, let’s use a basic setup based on the documentation:

---
- name: Listen for events on a webhook
  hosts: all
  ## Define our source for events
  sources:
    # use Python range function
    - ansible.eda.range:
        limit: 5
    # use webhook with path /endpoint
    - ansible.eda.webhook:
        host: 0.0.0.0
        port: 5000
    # use kafka
    - ansible.eda.kafka:
        topic: eda
        host: localhost
        port: 9092
        group_id: testing

Seems rather straightforward, right? First, we use the sources keyword. Then, with typical Ansible syntax, we provide the FQCN (fully qualified collection name) of the source plugin. As you can see, there are multiple event sources, all of which are described in the documentation. For this introduction, I will be using the webhook endpoint.
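Under the hood, an event source is a small Python plugin. As far as I understand the ansible.eda collection, a source plugin exposes an async main(queue, args) coroutine that puts event dictionaries onto an asyncio queue. ansible.eda.range does little more than emit {"i": n} for each n in range(limit). The sketch below imitates that convention. Treat it as an approximation, not the collection’s actual source code; the delay argument is an assumption. The collect driver is a hypothetical helper that only exists to run the plugin outside ansible-rulebook.

```python
import asyncio
from typing import Any


async def main(queue: asyncio.Queue, args: dict[str, Any]) -> None:
    """Range-like event source: emit {"i": n} for n in range(limit)."""
    delay = float(args.get("delay", 0))  # assumed optional pause between events
    for i in range(int(args["limit"])):
        await queue.put({"i": i})
        await asyncio.sleep(delay)


async def collect(limit: int) -> list[dict[str, Any]]:
    """Run the source against an in-memory queue and gather what it produced."""
    queue: asyncio.Queue = asyncio.Queue()
    await main(queue, {"limit": limit})
    events = []
    while not queue.empty():
        events.append(queue.get_nowait())
    return events


if __name__ == "__main__":
    print(asyncio.run(collect(5)))  # [{'i': 0}, {'i': 1}, {'i': 2}, {'i': 3}, {'i': 4}]
```

In ansible-rulebook the queue is owned by the engine, and each dictionary put on it becomes an event that the rules can match.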

Rules configuration

Now let’s define our first rules:

---
- name: Listen for events on a webhook
  hosts: all
  sources:
    # use webhook with path /endpoint
    - ansible.eda.webhook:
        host: 0.0.0.0
        port: 5000
  # define the conditions we are looking for
  rules:
    - name: Rule 1
      condition: event.payload.message == "Hello"
      # define the action after meeting the condition
      action:
        run_playbook:
          name: what.yml
    # As it's a list of dicts, we can add another rule
    - name: Rule 2
      condition: event.payload.message == "Ahoj"
      action:
        run_playbook:
          name: co.yml
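To see how the rules above are evaluated, here is a toy Python model of the same logic. Each rule pairs a condition on the event payload with a playbook name, and the first matching rule wins. As far as I know, this mirrors ansible-rulebook’s default of stopping at the first matching rule unless match_multiple_rules is enabled. The real engine parses and evaluates the condition strings itself, so this is only an illustration. Rule, RULES and dispatch are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Sequence

Event = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Event], bool]  # stands in for the rulebook condition string
    playbook: str                       # what run_playbook would execute


RULES = (
    Rule("Rule 1", lambda e: e.get("payload", {}).get("message") == "Hello", "what.yml"),
    Rule("Rule 2", lambda e: e.get("payload", {}).get("message") == "Ahoj", "co.yml"),
)


def dispatch(event: Event, rules: Sequence[Rule] = RULES) -> Optional[str]:
    """Return the playbook of the first rule whose condition matches, else None."""
    for rule in rules:
        if rule.condition(event):
            return rule.playbook
    return None  # no rule matched: nothing is executed


if __name__ == "__main__":
    for message in ("Test", "Hello", "Ahoj"):
        print(message, "->", dispatch({"payload": {"message": message}}))
```

With the three messages used in the next section, this prints None for “Test”, what.yml for “Hello” and co.yml for “Ahoj”.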

Execution phase

Great! Let’s assume that both playbooks called by run_playbook are simple and look very similar: what.yml prints “World”, and co.yml prints “Svet” instead:

---
- hosts: localhost
  connection: local
  tasks:
    - debug:
        msg: "World"

Now we’re good to go and can execute our ansible-rulebook command:

ansible-rulebook --rulebook eda-example.yml -i inventory.yml --verbose

Then let’s open another terminal window (or log in to the bastion host) and send a test HTTP request:

curl -H 'Content-Type: application/json' -d "{\"message\": \"Test\"}" 10.192.0.92:5000/endpoint

Inside the log we can see:

2024-03-20 20:42:26,859 - aiohttp.access - INFO - 10.192.0.92 [20/Mar/2024:20:42:26 +0000] "POST /endpoint HTTP/1.1" 200 158 "-" "curl/7.76.1"

No rule matches “Test”, so only the access log entry appears. Next, let’s meet our conditions by changing the message to “Hello”, and then to “Ahoj”:

2024-03-20 20:44:39,199 - aiohttp.access - INFO - 10.192.0.92 [20/Mar/2024:20:44:39 +0000] "POST /endpoint HTTP/1.1" 200 158 "-" "curl/7.76.1"

PLAY [localhost] ***************************************************************

TASK [Gathering Facts] *********************************************************
ok: [localhost]

TASK [debug] *******************************************************************
ok: [localhost] => {
    "msg": "World"
}

PLAY RECAP *********************************************************************
localhost : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

2024-03-20 20:44:41,923 - ansible_rulebook.action.runner - INFO - Ansible runner Queue task cancelled
2024-03-20 20:44:41,924 - ansible_rulebook.action.run_playbook - INFO - Ansible runner rc: 0, status: successful
2024-03-20 20:46:14,240 - aiohttp.access - INFO - 10.192.0.92 [20/Mar/2024:20:46:14 +0000] "POST /endpoint HTTP/1.1" 200 158 "-" "curl/7.76.1"

PLAY [localhost] ***************************************************************

TASK [Gathering Facts] *********************************************************
ok: [localhost]

TASK [debug] *******************************************************************
ok: [localhost] => {
    "msg": "Svet"
}

PLAY RECAP *********************************************************************
localhost : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

2024-03-20 20:46:16,620 - ansible_rulebook.action.runner - INFO - Ansible runner Queue task cancelled
2024-03-20 20:46:16,621 - ansible_rulebook.action.run_playbook - INFO - Ansible runner rc: 0, status: successful
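Besides curl, the same test events can be sent from a script. Below is a minimal sketch that uses only Python’s standard library. build_request and send_event are hypothetical helper names, and the /endpoint path and port 5000 are taken from the curl call above. Building the request is kept separate from sending it, so the request can be inspected without a running rulebook.

```python
import json
import urllib.request


def build_request(host: str, port: int, payload: dict, path: str = "/endpoint") -> urllib.request.Request:
    """Build the same POST the curl command sends: a JSON body with a JSON content type."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}:{port}{path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(host: str, port: int, payload: dict, timeout: float = 5.0) -> int:
    """Send the event to the webhook source and return the HTTP status code."""
    with urllib.request.urlopen(build_request(host, port, payload), timeout=timeout) as response:
        return response.status
```

With ansible-rulebook running, send_event("10.192.0.92", 5000, {"message": "Ahoj"}) should return 200 and trigger co.yml, just like the curl call.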

Now we have a working EDA example. Let’s focus on something more useful.

Example use cases

As you have probably noticed already, this looks more like a REST API for Ansible, and you’re right: it can be used that way, since a webhook call is an event too. So let’s focus on basic administration tasks.

---
- name: Listen for events on a webhook
  hosts: all
  sources:
    - ansible.eda.webhook:
        host: 0.0.0.0
        port: 5000
  # the rules key is required; the conditions below belong to it
  rules:
    - name: Restart Server
      condition: event.payload.task == "restart"
      action:
        run_playbook:
          name: reboot.yml
    - name: Reload Nginx
      condition: event.payload.task == "reload-nginx"
      action:
        run_playbook:
          name: reload-nginx.yml

With this rulebook, we’re able to restart the server or reload Nginx through a REST call. That can easily be connected to ITSM systems like Jira or ServiceNow. However, please note that, based on the documentation, there is no filtering or tagging implemented. This means we need a dedicated playbook for each particular group of hosts or type of issue.
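The glue between a ticketing system and the rulebook can stay very small. Below is a hypothetical Python sketch that maps a ticket’s category to one of the task values the rulebook above understands and refuses anything else, so a typo in a ticket never reaches the webhook. The ticket structure (a dict with a category field) and the category names are assumptions for illustration, not the Jira or ServiceNow API.

```python
from typing import Any

# Hypothetical ticket categories mapped to the "task" values matched by the rulebook conditions.
CATEGORY_TO_TASK = {
    "server-unresponsive": "restart",
    "nginx-config-change": "reload-nginx",
}


def ticket_to_payload(ticket: dict[str, Any]) -> dict[str, str]:
    """Translate a ticket into the webhook payload, e.g. {"task": "restart"}.

    Raises ValueError for categories without a matching rule, instead of
    sending an event that the rulebook would silently ignore.
    """
    category = ticket.get("category")
    task = CATEGORY_TO_TASK.get(category)
    if task is None:
        raise ValueError(f"no automation defined for category {category!r}")
    return {"task": task}


if __name__ == "__main__":
    print(ticket_to_payload({"id": "OPS-1", "category": "nginx-config-change"}))
```

Keeping the allow-list on the sender side is a design choice: the rulebook would simply ignore unknown tasks, but failing early gives the ticket a clear error instead of silence.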

Summary

EDA seems to be an increasingly popular topic in many organizations, which is why, in my opinion, it’s a good time to take a look at it. To make it even simpler to start, I prepared a ready-to-use project that contains:

- playground based on AWS

- all the playbooks and rules included in this article

- step-by-step README file

So please treat this article as an introduction; the real magic should happen in your own AWS account. Play with rules and use cases, and think about the possibilities this pattern can deliver in your organization, or which aspects it can improve. If you have any questions or need help, don’t hesitate to contact me.