Meetings on demand

Welcome

Yesterday I decided to solve another, probably non-existent problem. For quite a long time, I had been looking for a solution that would allow me to schedule meetings with friends, family, and clients (a custom domain looks very professional). Most of the available services are paid monthly, which is not very cost-effective in my case, as I have one meeting per month, sometimes two. Also, let’s assume that being AI training material for Google or Zoom isn’t my dream position.

Suddenly, I realized that I could use Jitsi and self-host it. The problem was that I was looking for an on-demand solution. So I took the official Docker images and rewrote the setup for ECS, which should just work.

Also, I’d like to apologize to everyone who is waiting for part two of setting up Keycloak, or for the EDA use cases. Someday I will deliver them; I just need more time. Additionally, during the last month I received a lot of emails about challenges related to setting up the best open-source SSO solution on ECS. That means my article was needed, and at least a few people read it. That’s cool!

Jitsi

Ok, so let’s start with Jitsi. What is it?

Jitsi Meet is a set of Open Source projects which empower users to use and deploy video conferencing platforms with state-of-the-art video quality and features.

As you can see, it’s open-source software under the Apache-2.0 license.

Services list

As you can see, the post structure is very similar to the last one. However, today we need Cloud Map instead of a database.

- ECS(Fargate)

- AWS Application Load Balancer

- AWS CloudMap

- Route53 for domain management

Ok, good. Finally, we will deploy something different, right?

Not really, but the use case is super useful!

Implementation

As always, it could be simpler and more elegant, but it does the job. As a starting point, we have three files.

❯ tree src

src

├── base.ts

├── jitsi.ts

└── main.ts

Based on my splitting approach, I have a dedicated stack for the more static (and cheap) resources, so we can keep them forever (let’s say). Then we have a file dedicated to our Jitsi ECS stack. Finally, there is the control file, aka src/main.ts, with the needed variables like account ID, region, container version, and your domain name. The content of that file is:

import { App, Tags } from 'aws-cdk-lib';
import { BaseStack } from './base';
import { JitsiStack } from './jitsi';

// Target AWS account and region for both stacks
const devEnv = {
  account: '471112990549',
  region: 'eu-central-1',
};

const DOMAIN_NAME: string = '3sky.in';
const JITSI_IMAGE_VERSION: string = 'stable-9584-1';

const app = new App();

// Long-lived, cheap resources
const base = new BaseStack(app, 'jitsi-baseline', {
  env: devEnv,
  stackName: 'jitsi-baseline',
  domainName: DOMAIN_NAME,
});

// On-demand Jitsi instance, built on top of the baseline resources
new JitsiStack(app, 'jitsi-instance', {
  env: devEnv,
  stackName: 'jitsi-instance',
  sg: base.privateSecurityGroup,
  listener: base.listener,
  ecsCluster: base.ecsCluster,
  namespace: base.namespace,
  domainName: DOMAIN_NAME,
  // images version
  jitsiImageVersion: JITSI_IMAGE_VERSION,
});

Tags.of(app).add('description', 'Jitsi Temporary Instance');

app.synth();

Networking

This is up to you; however, I decided to use a separate, dedicated VPC with two AZs, which is an ALB requirement. Additionally, I’m using two types of subnets: PUBLIC and PRIVATE_WITH_EGRESS. Then we have the ALB, the Route53 assignment, security groups, the ECS cluster, and an interesting new object: the namespace. What do I need it for? Let’s dive into docker-compose for a while.

That’s my docker-compose for the Actual app, which IMO is a great app for personal budgeting if you’re living in the EU.

name: actual
services:
  actual_server:
    container_name: actual
    image: docker.io/actualbudget/actual-server:24.7.0
    expose:
      - 5006
    volumes:
      - data:/data
    networks:
      - proxy
    restart: unless-stopped

networks:
  proxy:
    name: proxy
    external: true

volumes:
  data:
    external: true
    name: actual_data

As you can see, we have a construct called networks. In the above case it is a network of the default bridge type, named proxy, with the external annotation, which means that the resource is managed outside the stack. Networks are mostly used to connect services between or inside stacks. We have the same construct in Jitsi’s original docker-compose file.

[...]
        networks:
            meet.jitsi:

# Custom network so all services can communicate using a FQDN
networks:
    meet.jitsi:

Now let’s get back to the AWS-native landscape. ECS does not support networking in the same way as Docker. To solve that issue, we need to create a private DNS entry that points to the prosody container and resolves under the name xmpp.meet.jitsi. Sounds complex, right? Fortunately, there is a service called AWS Cloud Map.

AWS Cloud Map is a cloud resource discovery service. With Cloud Map, you can define custom names for your application resources, and it maintains the updated location of these dynamically changing resources.

How to use it? It’s simpler than you might think!

- First define a namespace

const namespace = new servicediscovery.PrivateDnsNamespace(this, 'Namespace', {
  name: 'meet.jitsi',
  vpc,
});

- Define cloudMapOptions inside your FargateService.

const ecsService = new ecs.FargateService(this, 'EcsService', {
  cluster: cluster,
  taskDefinition: ecsTaskDefinition,
  vpcSubnets: {
    subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS,
  },
  securityGroups: [securityGroup],
  cloudMapOptions: {
    name: 'xmpp',
    container: prosody,
    cloudMapNamespace: namespace,
    dnsRecordType: servicediscovery.DnsRecordType.A,
  },
});

Then all your containers, and other services in the VPC, will be able to reach the prosody container via the simple xmpp.meet.jitsi FQDN! That is not always a good thing, which is a good reason for using a dedicated VPC.
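To make the naming rule concrete: Cloud Map publishes a service under the service name followed by the namespace name, so the xmpp service in the meet.jitsi namespace becomes xmpp.meet.jitsi. Below is a minimal sketch of that rule. The helper names cloudMapFqdn and xmppEnvironment are hypothetical (they are not part of my repo), and XMPP_SERVER is, as far as I know, the variable the Jitsi Docker images read for the XMPP host, so treat it as an assumption.

```typescript
// Hypothetical helper: Cloud Map exposes a service as "<service>.<namespace>".
function cloudMapFqdn(serviceName: string, namespaceName: string): string {
  // Strip stray leading/trailing dots so the parts join cleanly.
  const clean = (s: string): string => s.replace(/^\.+|\.+$/g, '');
  return `${clean(serviceName)}.${clean(namespaceName)}`;
}

// Hypothetical helper: environment that points a container at prosody.
// Assumption: XMPP_SERVER is the variable name the Jitsi images expect.
function xmppEnvironment(namespaceName: string): Record<string, string> {
  return { XMPP_SERVER: cloudMapFqdn('xmpp', namespaceName) };
}

console.log(cloudMapFqdn('xmpp', 'meet.jitsi'));
console.log(xmppEnvironment('meet.jitsi'));
```

The returned object could be spread into the environment block of a container definition, so the namespace name lives in one place only.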

Containers

Now that we have the structure and networking, we need to add containers. In our case it is very simple. To our FargateTaskDefinition,

const ecsTaskDefinition = new ecs.FargateTaskDefinition(this, 'TaskDefinition', {
  memoryLimitMiB: 8192,
  cpu: 4096,
  runtimePlatform: {
    operatingSystemFamily: ecs.OperatingSystemFamily.LINUX,
    cpuArchitecture: ecs.CpuArchitecture.X86_64,
  },
});

we simply add four containers (web, jicofo, jvb, and prosody), just as shown below:

ecsTaskDefinition.addContainer('web', {
  image: ecs.ContainerImage.fromRegistry('quay.io/3sky/jitsi-web:' + jitsiImageVersion),
  environment: {
    TZ: 'Europe/Warsaw',
    PUBLIC_URL: 'https://meet.' + theDomainName,
  },
  portMappings: [
    {
      containerPort: 80,
      protocol: ecs.Protocol.TCP,
    },
  ],
  logging: new ecs.AwsLogDriver({
    streamPrefix: this.stackName + '-web',
  }),
});
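A side note on the task size used above: Fargate accepts only certain cpu and memory pairs, and 8192 MiB is the smallest memory value allowed with cpu set to 4096. The sketch below checks a pair before synth. The table reflects the Fargate task sizes as I know them for up to 4 vCPU (larger sizes are left out), and the names FARGATE_MEMORY_MIB and isValidFargateSize are hypothetical, so verify against the AWS documentation before relying on it.

```typescript
// Inclusive list of memory values in 1024 MiB steps.
function memoryRange(from: number, to: number): number[] {
  const out: number[] = [];
  for (let m = from; m <= to; m += 1024) out.push(m);
  return out;
}

// Assumption: allowed memory (MiB) per CPU value, up to 4 vCPU only.
const FARGATE_MEMORY_MIB: Record<number, number[]> = {
  256: [512, 1024, 2048],
  512: memoryRange(1024, 4096),
  1024: memoryRange(2048, 8192),
  2048: memoryRange(4096, 16384),
  4096: memoryRange(8192, 30720),
};

// Hypothetical helper: true when the pair is in the table above.
function isValidFargateSize(cpu: number, memoryMiB: number): boolean {
  return (FARGATE_MEMORY_MIB[cpu] ?? []).includes(memoryMiB);
}

console.log(isValidFargateSize(4096, 8192)); // true
console.log(isValidFargateSize(4096, 4096)); // false
```

If a smaller task turns out to be enough for a two-person call, a check like this helps to pick a valid cheaper pair instead of guessing.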

Add-ons

If you decide to go through my source code, you may spot two additional points.

- Secrets are simplified

That was done on purpose. Even in the public repo, you will see:

let JICOFO_AUTH_PASSWORD: string = '2dAZl8Jkeg5cKT/rbJDZcslGCWt1cA3NnF4QqkFFATY=';

let JVB_AUTH_PASSWORD: string = 'Tph1fJEdU6lFY8aTNPz4EpI5iewQXl+Ot17IeGCmvBs=';

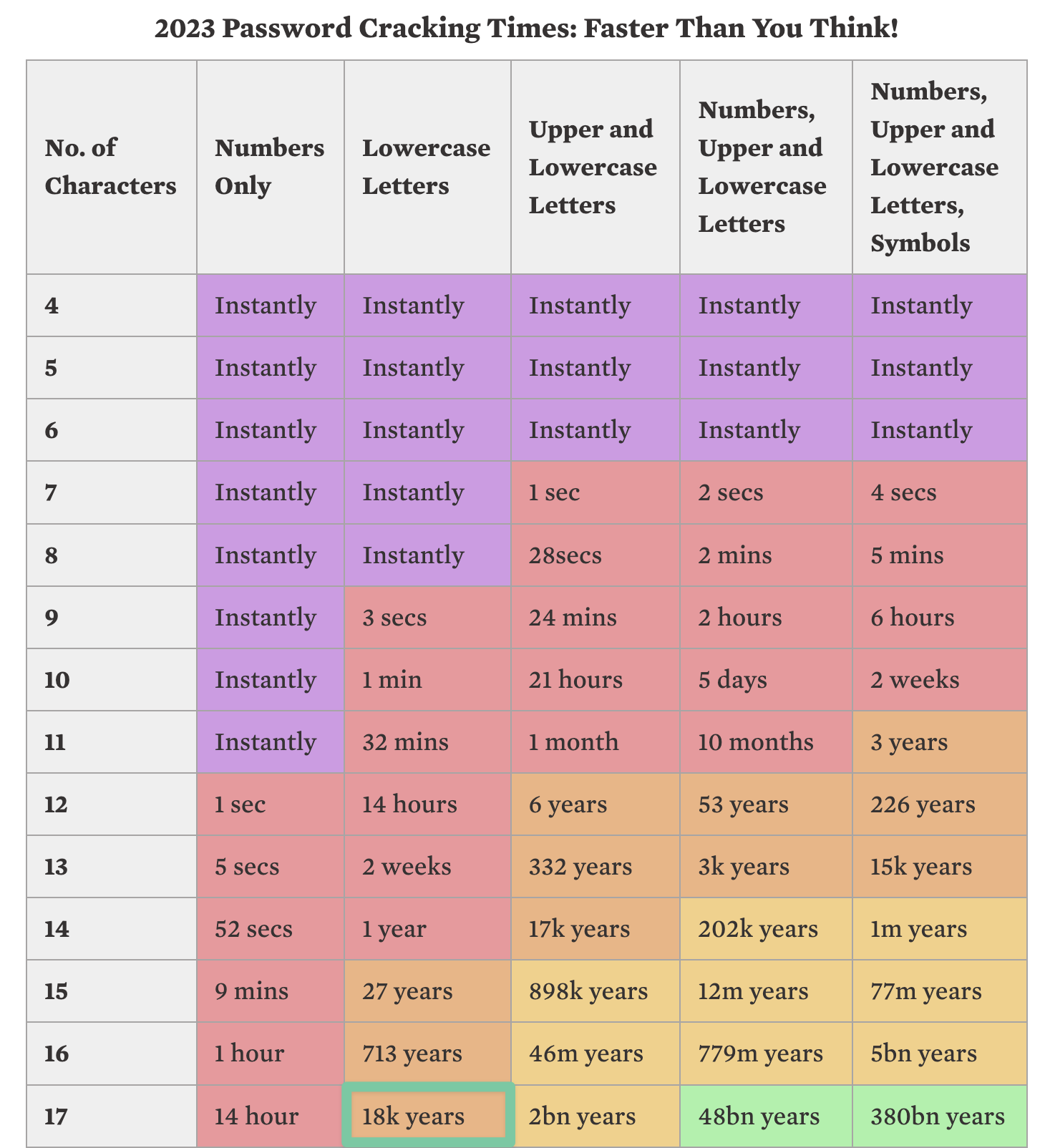

The reason is the ephemeral nature of the service and the build time. AWS Secrets Manager takes more time to create a secret and, afterwards, to destroy it. Here, for my production environment, I can just change those strings a bit and be safe enough during my 1h call, or even a bit longer according to this link.
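If changing the strings by hand feels too manual, they could also be generated at synth time. This is only a sketch under my own assumptions, not what the repo does: the helper generateAuthPassword is hypothetical, it uses Node’s built-in crypto module, and the values still end up in plain text in the generated template, so it is no replacement for a real secret store.

```typescript
import { randomBytes } from 'node:crypto';

// Hypothetical helper: N random bytes, base64-encoded
// (32 bytes give a 44-character string).
function generateAuthPassword(bytes: number = 32): string {
  return randomBytes(bytes).toString('base64');
}

// Fresh values on every synth, which suits a short-lived stack.
const JICOFO_AUTH_PASSWORD: string = generateAuthPassword();
const JVB_AUTH_PASSWORD: string = generateAuthPassword();

console.log(JICOFO_AUTH_PASSWORD.length, JVB_AUTH_PASSWORD.length);
```

Keep in mind that new values on every synth also mean a new task definition on every deploy, which is fine for an on-demand instance but not for something long-running.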


- Images are using quay.io

image: ecs.ContainerImage.fromRegistry('quay.io/3sky/jitsi-web:' + jitsiImageVersion),

Due to testing and spawning containers multiple times, it was very easy to hit the Docker Hub rate limit. As a lazy person, I just created a bash script to migrate everything to the free quay.io, which has no such limit.

#!/bin/bash

# Container version (image tag) passed as the first argument
CONTAINER_VERSION=$1

# Define an array of images
IMAGES=("web" "prosody" "jvb" "jicofo")

# Fail early when no version was given
if [ -z "$CONTAINER_VERSION" ]; then
    echo "Usage: $0 <container-version>"
    exit 1
fi

# Check if skopeo is installed
if ! command -v skopeo &> /dev/null
then
    echo "skopeo could not be found, please install it first."
    exit 1
fi

# Function to copy image from Docker Hub to Quay
copy_image() {
    local IMAGE_NAME=$1
    local DOCKERHUB_IMAGE="docker.io/jitsi/$IMAGE_NAME:$CONTAINER_VERSION"
    local QUAY_IMAGE="quay.io/3sky/jitsi-$IMAGE_NAME:$CONTAINER_VERSION"

    # Copy the image using skopeo and report whether it succeeded
    if skopeo copy "docker://$DOCKERHUB_IMAGE" "docker://$QUAY_IMAGE"; then
        echo "Image $IMAGE_NAME:$CONTAINER_VERSION successfully copied from Docker Hub to Quay."
    else
        echo "Failed to copy image $IMAGE_NAME:$CONTAINER_VERSION from Docker Hub to Quay."
    fi
}

# Iterate over the images and copy each one
for IMAGE in "${IMAGES[@]}"
do
    copy_image "$IMAGE"
done
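The script and the CDK code share one naming convention: jitsi/NAME on Docker Hub becomes 3sky/jitsi-NAME on quay.io, with the same tag. A small sketch of that mapping on the TypeScript side is shown below. The names COMPONENTS, dockerHubImage, and quayImage are hypothetical helpers made up for illustration; only the image paths come from the code above.

```typescript
// Component names used by both the copy script and the task definition.
const COMPONENTS = ['web', 'prosody', 'jvb', 'jicofo'] as const;
type Component = (typeof COMPONENTS)[number];

// Source image on Docker Hub, as referenced in the bash script.
function dockerHubImage(component: Component, version: string): string {
  return `docker.io/jitsi/${component}:${version}`;
}

// Mirrored image on quay.io, as referenced in the container definitions.
function quayImage(component: Component, version: string): string {
  return `quay.io/3sky/jitsi-${component}:${version}`;
}

// Print the source -> target pair for every component.
for (const component of COMPONENTS) {
  const version = 'stable-9584-1';
  console.log(`${dockerHubImage(component, version)} -> ${quayImage(component, version)}`);
}
```

With a helper like quayImage, each addContainer call could build its image reference from the component name instead of repeating the registry path four times.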

Summary


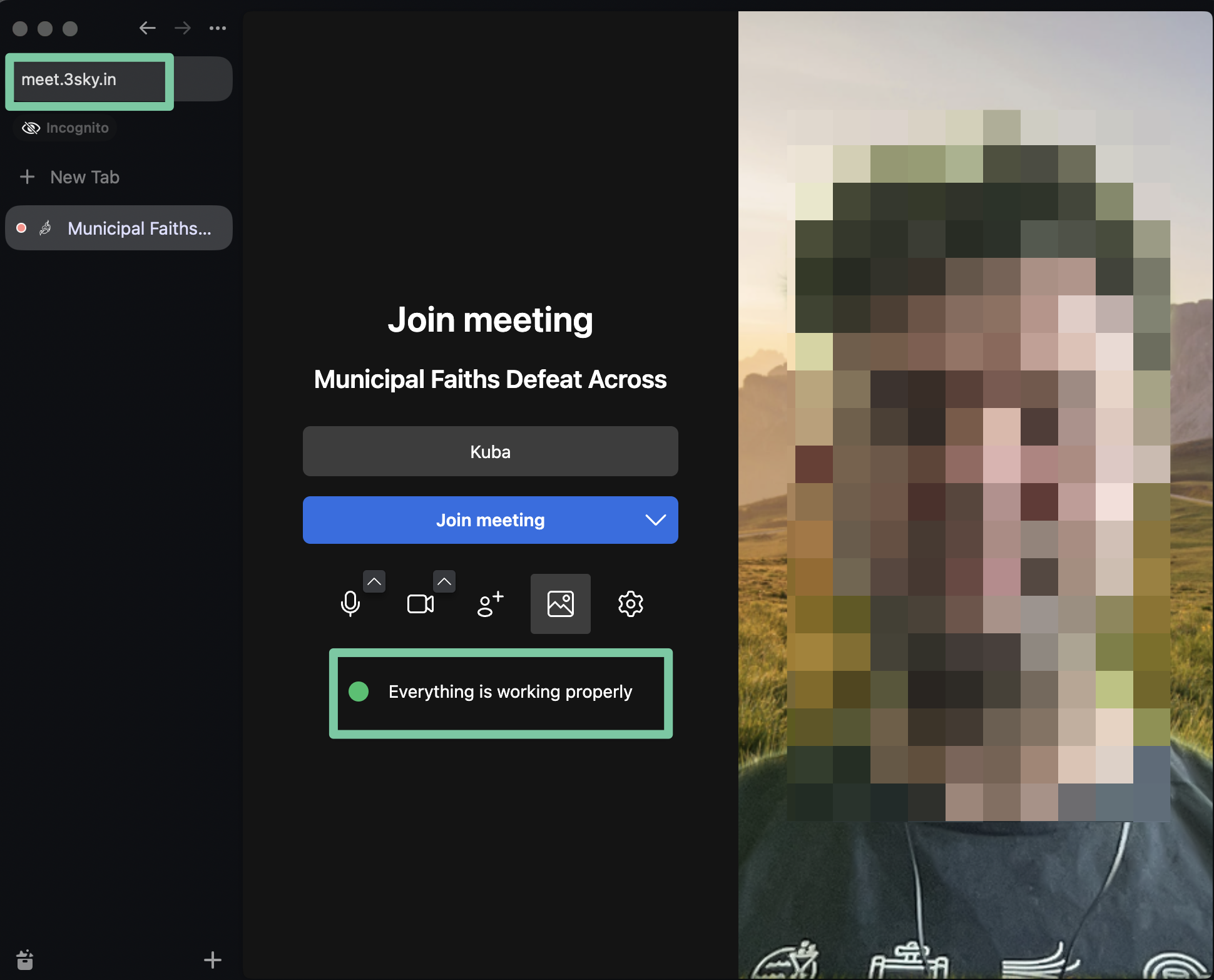

As you can see, I’m hosting my meeting platform with open-source software, AWS, and a bit of code. The solution is rather cheap and not tested against very big meetings, but stable enough to talk with my wife or a friend during summertime. There is no risk of being recorded, or of depending on Google account ownership. With that in mind, I’m going to finish overdue articles… or maybe self-host Ollama at home and replace ChatGPT. Who knows?

Ah, and to be fully open, the source code can be found on GitHub.