OneDev on ECS

Welcome

At the beginning of September, I saw a post from Johannes Koch about self-hosting a CodeCommit alternative. Then I realized that at home I’m using a not-so-popular, but very nice and solid git repository system. To be more precise, it’s much more than a git server. Let’s welcome OneDev.

Git server with CI/CD, kanban, and packages.

It also supports repo tags and groups, like GitLab. The project is also very resource-friendly, and I’ve been using it for a while on my home server.


To be honest, I’ve had no issues so far; however, I only store code there. I don’t have an opinion about the package registry, CI/CD, or Kanban, but if you need them, give them a try!

Also, are you thinking about hosting the apps you use on AWS instead of on a box under your desk? Maybe energy is very expensive where you live, or you’re just not sure the hobby fits your personality. If so, this blog is a great place to be, as I’m starting a “self-host on AWS” series.

Why host a git server on your own?

The main point here is privacy. Sometimes laziness as well.

Let’s imagine a situation where you accidentally push AWS keys or an SSH key into a repository. If you’re using GitHub, after a while you will get a notification that one of your repositories contains a secret, so you should rotate it immediately (don’t ask me how I know this). There is always a chance that someone has already started using it, so your AWS bill grows with every second.

If it is a private internal git server, nobody cares, as you trust your coworkers, right? Nobody will judge your code, even if you’re using <put random JS framework name here>.

However, the real reason is code ownership. Some organisations would like to fully own their code, or at least host it in Europe. Now that we know how to answer the why question, let’s focus on the how.

Implementation

As I already said, the starting point for the implementation was my docker-compose file:

```yaml
---
name: onedev

volumes:
  data:
    name: onedev_data

services:
  onedev:
    image: 1dev/server:11.0.9
    container_name: onedev
    restart: always
    volumes:
      # lets OneDev run CI jobs as containers on the host Docker daemon
      - /var/run/docker.sock:/var/run/docker.sock
      - data:/opt/onedev
    ports:
      - "6610:6610" # web UI (HTTP)
      - "6611:6611" # git over SSH
```

On top of it, I have a Caddy reverse proxy config:

```
{
	log default {
		output stdout
		format json
		exclude http.handlers.reverse_proxy
	}
}

*.pinkwall.cc {
	tls {
		# Cloudflare API token is read from the environment, not stored in the file
		dns cloudflare {env.CF_API_TOKEN}
	}

	@git host git.pinkwall.cc
	handle @git {
		reverse_proxy 100.16.152.33:6610
	}
}
```

And an SSH config, because Caddy can’t reverse proxy SSH, so the client connects straight to port 6611:

```
Host git.pinkwall.cc
    PreferredAuthentications publickey
    IdentitiesOnly=yes
    IdentityFile ~/.ssh/id_ed25519_local
    User 3sky
    Port 6611
```

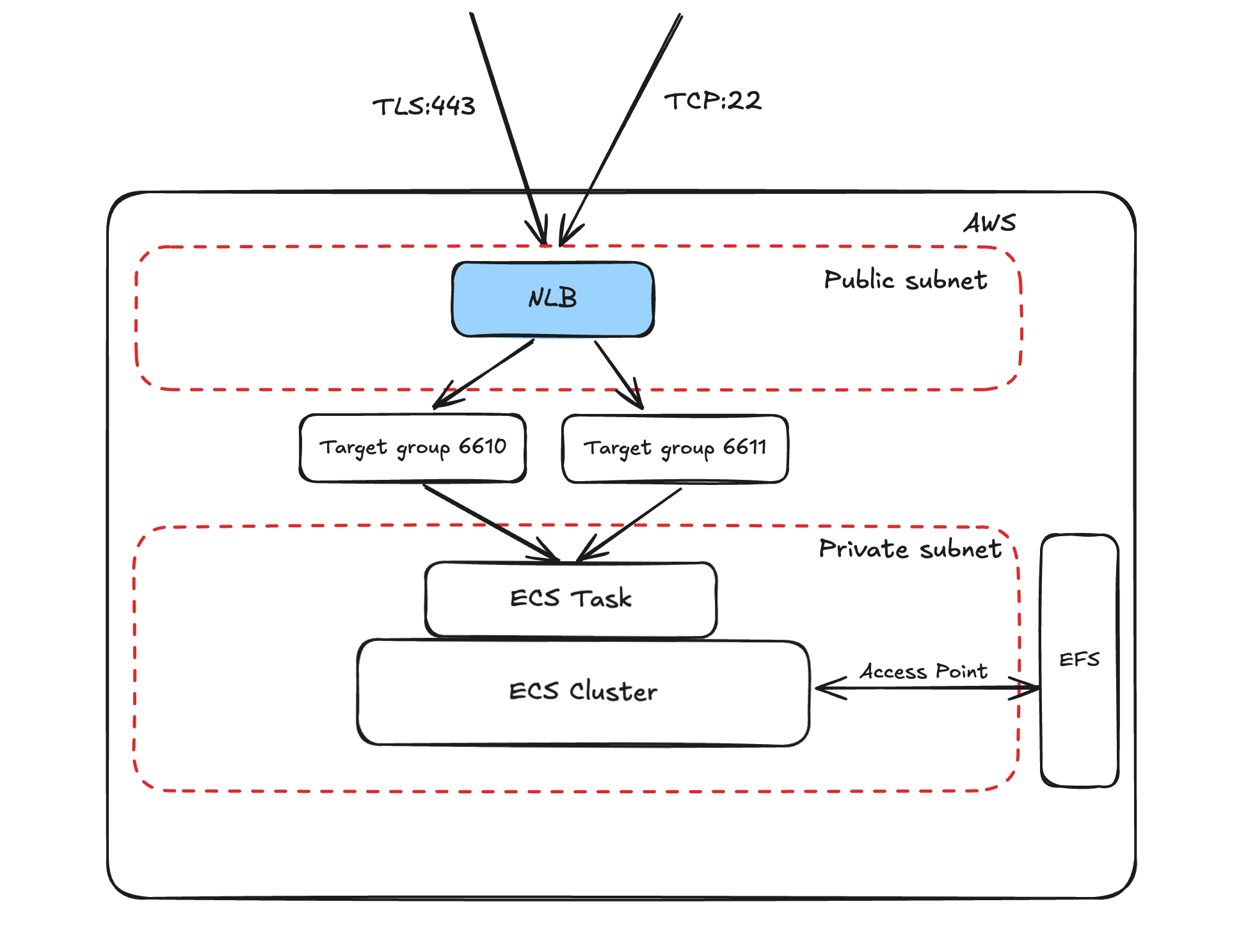

So when I started thinking about the AWS-based design, I created a diagram. I drew it on paper first, but the digital one looks more pro.


- As you can see, I’m using an NLB because I wanted to operate on layer 4 (TCP), not layer 7 (HTTP). Why? An ALB only handles HTTP(S), so git over SSH wouldn’t get through it. With an NLB, both HTTPS and SSH are just TCP streams.

- EFS as persistent storage, as we need to store our data, right?

- The EFS access point has root permissions inside the volume, because OneDev expects it.

- ECS with Fargate, to make it as cost-efficient as possible.

- We’re using a standard 2-tier subnet architecture with small CIDR ranges.

- We’re not interested in exposing the ECS cluster directly to the Internet.

- We do not expect more apps in the subnets, for now.
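To put “small CIDR ranges” into numbers, here is a small TypeScript helper that computes how many addresses you can actually use in an AWS subnet. It relies on AWS reserving five IPv4 addresses in every subnet and allowing IPv4 subnet sizes from /16 to /28. The /28 layout in the example is hypothetical, since the real CIDR range isn’t shown here.

```typescript
// AWS reserves five IPv4 addresses in every subnet: the network address,
// the VPC router, the DNS server, one for future use, and the broadcast address.
const AWS_RESERVED_PER_SUBNET = 5;

// Usable IPv4 addresses in an AWS subnet with the given prefix length.
function usableSubnetAddresses(prefixLength: number): number {
  // AWS only allows IPv4 subnets from /16 (largest) to /28 (smallest).
  if (Math.floor(prefixLength) !== prefixLength || prefixLength < 16 || prefixLength > 28) {
    throw new RangeError(`AWS IPv4 subnets must be /16 to /28, got /${prefixLength}`);
  }
  return Math.pow(2, 32 - prefixLength) - AWS_RESERVED_PER_SUBNET;
}

// Hypothetical layout: 2 tiers (public, private) x 2 AZs, each subnet a /28.
const tiers = ['public', 'private'];
const azCount = 2;
const perSubnet = usableSubnetAddresses(28);
console.log(`${tiers.length * azCount} subnets, ${perSubnet} usable IPs each`);
```

A /28 leaves 11 usable addresses per subnet. That is plenty for a single Fargate task, which takes one IP in awsvpc mode, and it keeps the VPC tidy.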

Actual implementation

The code can be found on GitHub. What a plot twist, isn’t it?

As in most of my implementations, I’m using AWS CDK, TypeScript, and Projen. Below I will try to show the most crucial parts of this implementation, as the whole file has 199 lines.

Constants

```typescript
const CUSTOM_IMAGE: string = '1dev/server:11.0.9';
const DOMAIN_NAME: string = '3sky.in';
```

I’m using two hardcoded values (three, if you count the CIDR range). CUSTOM_IMAGE is the image for our ECS task, and DOMAIN_NAME is our only prerequisite. I strongly recommend having your domain name on AWS; if you’re like me, here you can find tips about setting it up on a small budget. Of course, an external domain is supported as well, but it requires a bit more work and some changes in the code.

Security groups

Then we have security groups, which are quite important, right? Let’s open only the minimal set of traffic we need, as that’s good practice.

```typescript
// Public-facing SG for the Network Load Balancer: HTTPS and git over SSH only.
const nlbSecurityGroup = new ec2.SecurityGroup(this, 'NLBSecurityGroup', {
  vpc,
  description: 'Allow HTTPS and SSH traffic to NLB',
  allowAllOutbound: true,
});
nlbSecurityGroup.addIngressRule(ec2.Peer.anyIpv4(), ec2.Port.tcp(443), 'Allow HTTPS traffic from anywhere');
nlbSecurityGroup.addIngressRule(ec2.Peer.anyIpv4(), ec2.Port.tcp(22), 'Allow SSH traffic from anywhere');

// SG for the ECS tasks: reachable only from the NLB, on OneDev's two ports.
const privateSecurityGroup = new ec2.SecurityGroup(this, 'PrivateSG', {
  vpc,
  description: 'Allow access from NLB',
  allowAllOutbound: true,
});
privateSecurityGroup.addIngressRule(nlbSecurityGroup, ec2.Port.tcp(6610), 'Allow traffic for HTTP to app');
privateSecurityGroup.addIngressRule(nlbSecurityGroup, ec2.Port.tcp(6611), 'Allow traffic for SSH to app');

// SG for EFS: NFS (port 2049) only from the ECS tasks.
const efsSecurityGroup = new ec2.SecurityGroup(this, 'EfsSG', {
  vpc,
  description: 'Allow access from cluster',
  allowAllOutbound: true,
});
efsSecurityGroup.addIngressRule(privateSecurityGroup, ec2.Port.tcp(2049), 'Allow traffic to EFS from ECS cluster');
```

As you can see, we have three SGs: one attached to the NLB, a second one for our ECS tasks, and the last one for the EFS service.
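The three groups form a chain: Internet, then NLB, then tasks, then EFS. To make that chain explicit, here is a plain TypeScript model of their ingress rules. It is not CDK code; `Source`, `ingress`, and `isAllowed` are made-up names for illustration. It also ignores IPv6 and the fact that security groups are stateful, meaning return traffic is allowed automatically.

```typescript
// Simplified, illustrative model of the ingress rules above (not a CDK API).
type Source = 'internet' | 'nlb' | 'tasks' | 'efs';
type Target = Exclude<Source, 'internet'>;

interface IngressRule {
  from: Source; // a peer (the Internet) or another security group
  port: number; // TCP port on the receiving side
}

// Ingress rules per security group, mirroring the CDK code.
const ingress: Record<Target, IngressRule[]> = {
  nlb: [
    { from: 'internet', port: 443 },
    { from: 'internet', port: 22 },
  ],
  tasks: [
    { from: 'nlb', port: 6610 },
    { from: 'nlb', port: 6611 },
  ],
  efs: [{ from: 'tasks', port: 2049 }],
};

// Security groups are allow-lists: traffic passes only if some rule matches.
function isAllowed(from: Source, to: Target, port: number): boolean {
  return ingress[to].some((rule) => rule.from === from && rule.port === port);
}

console.log(isAllowed('internet', 'tasks', 6610)); // direct access to tasks is blocked
console.log(isAllowed('nlb', 'tasks', 6611));      // SSH via the NLB is allowed
```

Because each hop only trusts the group in front of it, nothing on the Internet can reach the tasks or EFS directly.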

EFS

Now let’s configure EFS, as it’s a new service, at least on this blog. We need a few things:

```typescript
// The file system lives in our VPC, guarded by the EFS security group.
const fileSystem = new efs.FileSystem(this, 'EfsFilesystem', { vpc, securityGroup: efsSecurityGroup });

const accessPoint = new efs.AccessPoint(this, 'VolumeAccessPoint', {
  fileSystem: fileSystem,
  path: '/opt/onedev',
  // app is running as root
  createAcl: {
    ownerGid: '0',
    ownerUid: '0',
    permissions: '755',
  },
  posixUser: {
    uid: '0',
    gid: '0',
  },
});

// A plain ecs.Volume object, not a construct.
const volume: ecs.Volume = {
  name: 'volume',
  efsVolumeConfiguration: {
    authorizationConfig: {
      accessPointId: accessPoint.accessPointId,
      iam: 'ENABLED',
    },
    fileSystemId: fileSystem.fileSystemId,
    transitEncryption: 'ENABLED',
  },
};

// 1 vCPU and 2 GB of RAM on x86_64 Linux; the volume belongs to the task definition.
const ecsTaskDefinition = new ecs.FargateTaskDefinition(this, 'TaskDefinition', {
  memoryLimitMiB: 2048,
  cpu: 1024,
  runtimePlatform: {
    operatingSystemFamily: ecs.OperatingSystemFamily.LINUX,
    cpuArchitecture: ecs.CpuArchitecture.X86_64,
  },
  volumes: [volume],
});

const container = ecsTaskDefinition.addContainer('onedev', {
  image: ecs.ContainerImage.fromRegistry(CUSTOM_IMAGE),
  portMappings: [
    { containerPort: 6610, protocol: ecs.Protocol.TCP }, // web UI (HTTP)
    { containerPort: 6611, protocol: ecs.Protocol.TCP }, // git over SSH
  ],
  logging: new ecs.AwsLogDriver({ streamPrefix: 'onedev' }),
});

// Mount the EFS volume where OneDev keeps its data.
container.addMountPoints({ readOnly: false, containerPath: '/opt/onedev', sourceVolume: volume.name });
```

First, the file system is created in our VPC and attached to its security group. Then we define the access point, which is basically a way of accessing our storage.

Please take a look at the createAcl and posixUser properties, as both of them are responsible for our storage security. createAcl sets the owner and permissions of /opt/onedev when the access point creates that directory. posixUser is the identity used for every file operation made through the access point. However, in the case of OneDev we need to be root, so… yes, it could be better.

Next, we have the volume, which here is just a plain JS object rather than a construct, which is a bit unexpected.

Then we add the volume to our task definition, not to the cluster itself!

Lastly, we add a container to our task and attach the mount point to it.

Not that complex, right?
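The permissions value in createAcl is an octal POSIX mode. To make '755' concrete, here is a small illustrative TypeScript decoder. It is not part of CDK, and it only handles the three-digit form. It shows that the owner (uid 0 here) gets read, write, and execute, while group and others get read and execute.

```typescript
// Decode a three-digit octal POSIX mode such as '755' into 'rwxr-xr-x'.
function modeToSymbolic(mode: string): string {
  if (!/^[0-7]{3}$/.test(mode)) {
    throw new Error(`expected three octal digits, got '${mode}'`);
  }
  const letters = ['r', 'w', 'x'];
  // One digit per class: owner, group, others.
  return mode
    .split('')
    .map((digit) => {
      const value = parseInt(digit, 8);
      // Bit values: 4 = read, 2 = write, 1 = execute.
      return letters.map((ch, i) => ((value & (4 >> i)) !== 0 ? ch : '-')).join('');
    })
    .join('');
}

console.log(modeToSymbolic('755')); // owner rwx, group r-x, others r-x
```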

Listeners

In the end, we need to specify listeners and targets. In general, it’s rather simple port mapping; however, please take a look at the protocol settings. Only the listener on port 443 should use the certificate and TLS as its protocol. The rest of the objects should use plain TCP. If we attached a certificate to our listener on port 22, SSH would be unable to connect to the service, because the NLB would expect a TLS handshake that SSH clients never send.

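```typescript
// HTTPS: TLS terminates on the NLB; OneDev receives plain TCP on 6610.
const listener443 = nlb.addListener('Listener443', {
  port: 443,
  certificates: [nlbcert],
  protocol: elbv2.Protocol.TLS,
});

// SSH must stay plain TCP end to end, so no certificate here.
const listener22 = nlb.addListener('Listener22', {
  port: 22,
  protocol: elbv2.Protocol.TCP,
});

// Point each target group at an explicit container port. Passing ecsService
// directly would register the container's first port mapping (6610) for both.
listener22.addTargets('ECS-22', {
  port: 6611,
  protocol: elbv2.Protocol.TCP,
  targets: [ecsService.loadBalancerTarget({ containerName: 'onedev', containerPort: 6611 })],
});

listener443.addTargets('ECS-443', {
  port: 6610,
  protocol: elbv2.Protocol.TCP,
  targets: [ecsService.loadBalancerTarget({ containerName: 'onedev', containerPort: 6610 })],
});
```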
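The listener rules from the text can be written down as plain data plus a check. This is a sketch for illustration only. `ListenerSpec` and `validateListeners` are hypothetical names, not CDK types. The check encodes three rules: only the 443 listener terminates TLS, SSH on 22 must be plain TCP, and each listener should forward to its own container port.

```typescript
// Plain-data model of the NLB listeners (illustrative, not CDK types).
type NlbProtocol = 'TCP' | 'TLS';

interface ListenerSpec {
  name: string;
  port: number;          // port the NLB listens on
  protocol: NlbProtocol; // TLS = terminate TLS on the NLB, TCP = pass bytes through
  targetPort: number;    // container port on the ECS task
}

const listeners: ListenerSpec[] = [
  { name: 'https', port: 443, protocol: 'TLS', targetPort: 6610 },
  { name: 'ssh', port: 22, protocol: 'TCP', targetPort: 6611 },
];

// Collect every rule violation instead of stopping at the first one.
function validateListeners(specs: ListenerSpec[]): string[] {
  const problems: string[] = [];
  const seenTargets: number[] = [];
  for (const spec of specs) {
    if (spec.protocol === 'TLS' && spec.port !== 443) {
      problems.push(`${spec.name}: only the HTTPS listener should terminate TLS`);
    }
    if (spec.port === 22 && spec.protocol !== 'TCP') {
      problems.push(`${spec.name}: SSH needs plain TCP since clients never send a TLS handshake`);
    }
    if (seenTargets.indexOf(spec.targetPort) !== -1) {
      problems.push(`${spec.name}: container port ${spec.targetPort} is already used by another listener`);
    }
    seenTargets.push(spec.targetPort);
  }
  return problems;
}

console.log(validateListeners(listeners)); // empty list for the layout above
```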

Local ssh

Because the NLB listens on the standard port 22, the local SSH config stays very simple, with no Port entry:

```
Host git.3sky.in
    PreferredAuthentications publickey
    IdentitiesOnly=yes
    IdentityFile ~/.ssh/id_rsa
    User kuba
```

This part is important. The wrong user or IdentityFile, inherited from git config --global, could mess with your git clone command. So unless you’re using the same user and SSH key pair everywhere, remember to add an entry to your ~/.ssh/config file.
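Why does a dedicated Host entry help? In ssh_config, keywords are case-insensitive. For each keyword, the first value obtained from a matching Host block wins, scanning the file top to bottom. Below is a simplified TypeScript model of that rule. It is an illustration, not OpenSSH code: negated patterns and Match blocks are not modeled, and the catch-all `User someone-else` entry is hypothetical.

```typescript
// Simplified model of ssh_config option resolution (illustrative only).
interface HostBlock {
  patterns: string[];              // Host patterns, e.g. ['git.3sky.in'] or ['*']
  options: Record<string, string>; // keyword -> value, e.g. { User: 'kuba' }
}

// Turn an ssh_config pattern ('*' = any run of chars, '?' = one char) into a RegExp.
function patternToRegExp(pattern: string): RegExp {
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, '\\$&');
  return new RegExp('^' + escaped.replace(/\*/g, '.*').replace(/\?/g, '.') + '$');
}

function resolveOptions(host: string, blocks: HostBlock[]): Record<string, string> {
  const resolved: Record<string, string> = {};
  for (const block of blocks) {
    if (!block.patterns.some((p) => patternToRegExp(p).test(host))) continue;
    for (const key of Object.keys(block.options)) {
      const keyword = key.toLowerCase(); // keywords are case-insensitive
      if (!(keyword in resolved)) {
        resolved[keyword] = block.options[key]; // first obtained value wins
      }
    }
  }
  return resolved;
}

const blocks: HostBlock[] = [
  { patterns: ['git.3sky.in'], options: { User: 'kuba', IdentityFile: '~/.ssh/id_rsa' } },
  // Hypothetical catch-all defaults further down the file.
  { patterns: ['*'], options: { User: 'someone-else', IdentityFile: '~/.ssh/id_ed25519' } },
];

console.log(resolveOptions('git.3sky.in', blocks).user); // "kuba"
```

If the catch-all block came first, its User and IdentityFile would win for git.3sky.in as well. That is why the specific entry should sit above any `Host *` defaults.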

Summary

Nice, now you can host your code on ECS with limited spending. OneDev seems to be a very solid solution, and because it’s only one container, it’s very easy to manage. When it comes to backups, all the data lives on EFS, so we can back it up and restore it without issues. What about spending? Not sure yet; it will require some real-life testing. Based only on vCPU and RAM usage, the cost could be around $35 per month.
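The ~$35 figure is easy to sanity-check, since Fargate bills per vCPU-hour and per GB-hour of memory. The rates below are an assumption: us-east-1 Linux/x86 on-demand prices. Check the AWS Fargate pricing page for your region before relying on the result. The 730-hour month matches what the AWS pricing calculator uses. NLB, EFS, CloudWatch Logs, and data transfer are not included.

```typescript
// Fargate compute pricing inputs.
interface FargateRates {
  perVcpuHour: number; // USD per vCPU per hour
  perGbHour: number;   // USD per GB of memory per hour
}

// ASSUMPTION: us-east-1 Linux/x86 on-demand rates; verify for your region.
const ASSUMED_RATES: FargateRates = { perVcpuHour: 0.04048, perGbHour: 0.004445 };

// Average month length used by the AWS pricing calculator.
const HOURS_PER_MONTH = 730;

// Compute-only monthly cost of one always-on task (NLB, EFS, logs, traffic are extra).
function monthlyFargateCost(
  vcpu: number,
  memoryGb: number,
  rates: FargateRates,
  hours: number = HOURS_PER_MONTH,
): number {
  return hours * (vcpu * rates.perVcpuHour + memoryGb * rates.perGbHour);
}

// The task definition: cpu 1024 = 1 vCPU, memoryLimitMiB 2048 = 2 GB.
console.log(monthlyFargateCost(1, 2, ASSUMED_RATES).toFixed(2)); // "36.04"
```

At these assumed rates, that lands at roughly $36 a month for compute alone, in line with the estimate above.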