Simplify the networking with VPC Lattice

Welcome

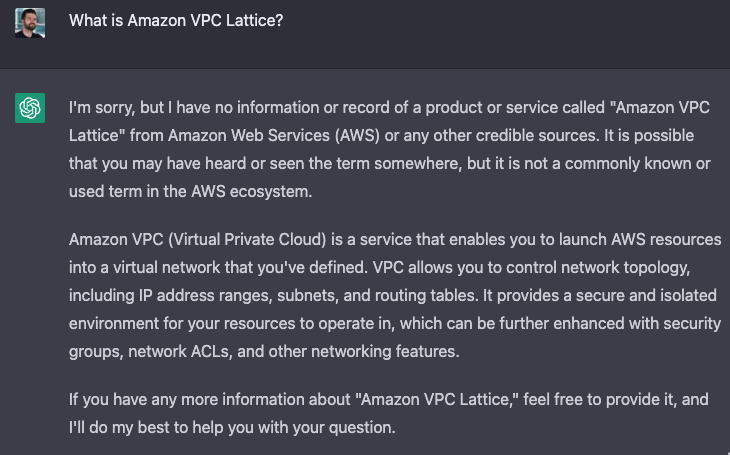

I started writing this on April 7th, 2023, a few weeks after the release of VPC Lattice (although it’s not yet available everywhere). I was curious about what it is and what it’s supposed to do, so I asked ChatGPT.

[Figure caption: For now we need to rely on documentation]

As there isn’t much information available yet, I’ll rely on the official documentation for now.

According to the documentation, “Amazon VPC Lattice is a fully managed application networking service that you use to connect, secure, and monitor all of your services across multiple accounts and virtual private clouds (VPCs).”

In other words, it lets you treat AWS like one big, complex Kubernetes cluster, while separate solutions still run as services across VPCs. It was likely created to simplify network management and make administrators’ lives easier. It groups services and VPCs into logical service networks.

Let’s check it out!

Tools used in this episode

- Python

- ECS

- CloudFormation (again!)

- EC2

Choosing the right infrastructure-as-code tool for VPC Lattice

A side note: I wanted to use Terraform this time, but unfortunately, comparing the Lattice documentation for both tools, CloudFormation seems better prepared:

Note that this is a relatively new service, so the situation may change in the coming weeks.

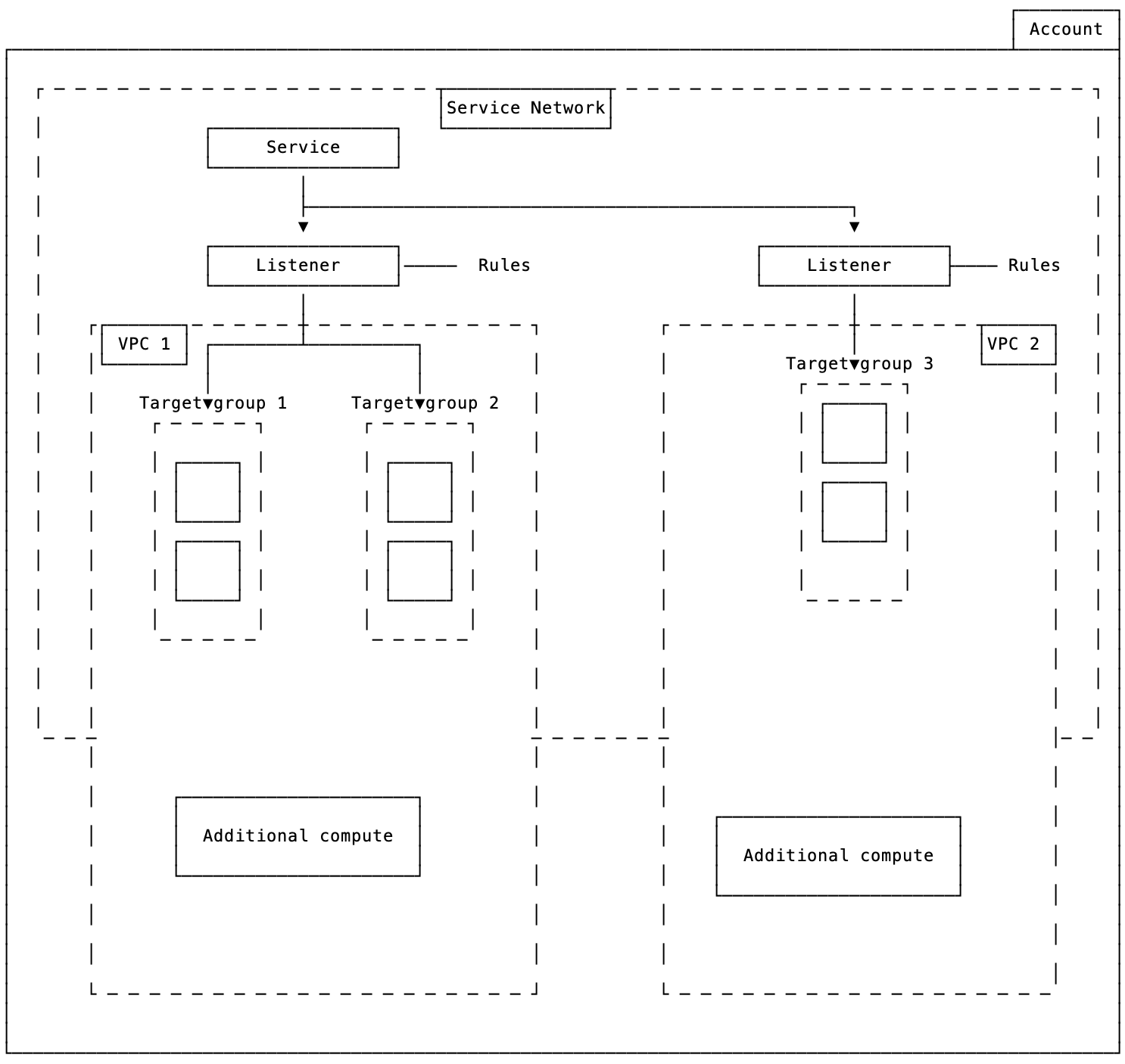

Key concepts

Here are some key concepts related to VPC Lattice:

Service - A set of objects (such as EC2 instances or ECS tasks) that act as one logical object. Importantly, it’s an independent solution, so it can be handled by a dedicated team and has logical boundaries.

Target group - A logical representation of our compute resources.

Listener - A process that checks for connection requests and routes them to the targets in a target group.

Rule - A routing rule attached to a listener; it decides which target group receives a request.

Service network - In my understanding, this is an abstraction on top of VPCs, as it allows us to associate resources in different VPCs.

Service directory - A service discovery solution for all VPC Lattice resources.

Auth policies - Authorization policies for services inside VPC Lattice.

[Figure caption: As my personal interpretation]

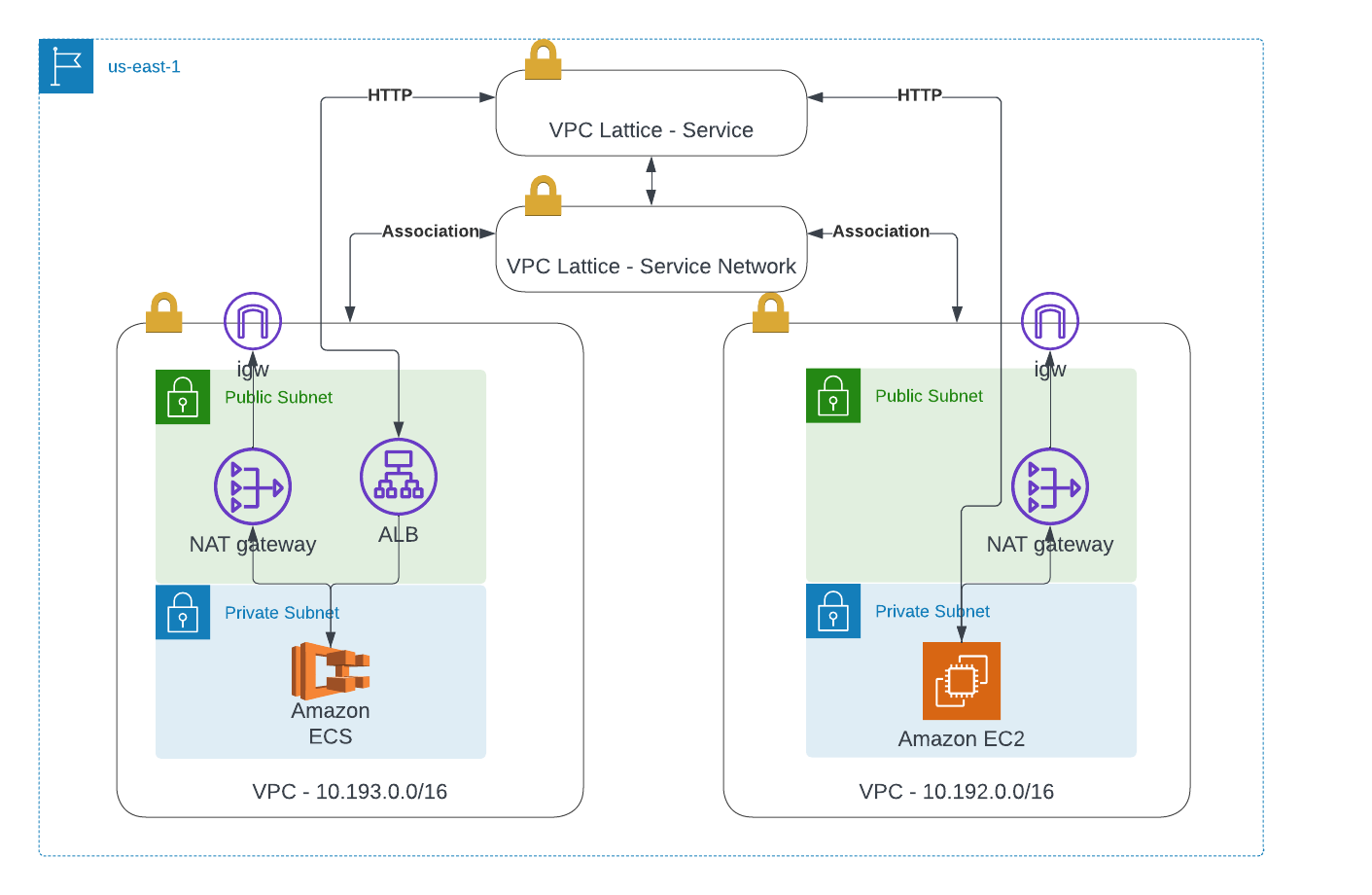
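
To make the relationships between these concepts a bit more tangible, here is a minimal Python sketch. It only illustrates one reading of the key concepts above and is not the VPC Lattice API: the class names, the `route` method, the path-prefix matching, and the sample IDs are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class TargetGroup:
    """Logical representation of compute resources."""
    name: str
    target_type: str  # e.g. "INSTANCE" or "ALB"
    targets: list[str] = field(default_factory=list)


@dataclass
class Rule:
    """Hypothetical routing rule: match a path prefix, forward to a target group."""
    path_prefix: str
    target_group: TargetGroup


@dataclass
class Listener:
    """Accepts requests on a port and picks a target group for each of them."""
    port: int
    default_target_group: TargetGroup
    rules: list[Rule] = field(default_factory=list)

    def route(self, path: str) -> TargetGroup:
        # First matching rule wins; otherwise fall back to the default action.
        for rule in self.rules:
            if path.startswith(rule.path_prefix):
                return rule.target_group
        return self.default_target_group


@dataclass
class Service:
    name: str
    listeners: list[Listener] = field(default_factory=list)


@dataclass
class ServiceNetwork:
    """Groups services and the VPCs that are allowed to reach them."""
    name: str
    services: list[Service] = field(default_factory=list)
    vpc_ids: list[str] = field(default_factory=list)


# Sample values are placeholders, not real resource IDs.
nginx = TargetGroup("ec2-lattice-tg", "INSTANCE", ["i-hypothetical"])
api = TargetGroup("ecs-lattice-tg", "ALB", ["hypothetical-alb-arn"])
listener = Listener(80, default_target_group=nginx, rules=[Rule("/api", api)])
service = Service("awesome-service", [listener])
network = ServiceNetwork("awesome-service-network", [service], ["vpc-1", "vpc-2"])

print(listener.route("/api/items").name)
print(listener.route("/").name)
```

The point of the model is the direction of the references: a service network holds services and VPCs, a service holds listeners, and a listener decides which target group gets the request.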

Project architecture

As you probably know, I like complete solutions, or at least working examples. So, as always, I will try to build something usable. The plan is to have:

- EC2 with Nginx in VPC 1, private subnet with NAT gateway

- ECS sample app in VPC 2, behind an internal load balancer (no public LB)

- VPC Lattice Service

Additionally:

- static credentials should be replaced with an OIDC configuration for GitHub Actions

[Figure caption: Implemented solution]

Implementation

First, let’s pick a region. I started with eu-central-1, but after the first deployment I realized that the service isn’t available in Europe yet, so I switched to us-east-1.

After that, I implemented a sample EC2-based template, followed by the ECS-based one. As the CloudFormation commands were getting longer and longer, I added a Makefile.

    default: validate

    REGION := "us-east-1"

    validate:
    	@echo "Validating CloudFormation templates"
    	cfn-lint ec2-formation.yaml
    	cfn-lint ecs-formation.yaml

    clean-ec2:
    	aws cloudformation delete-stack --stack-name lattice-ec2 --region $(REGION)

    clean-ecs:
    	aws cloudformation delete-stack --stack-name lattice-ecs --region $(REGION)

    clean-lattice:
    	aws cloudformation delete-stack --stack-name lattice-itself --region $(REGION)

    ec2:
    	cfn-lint ec2-formation.yaml
    	aws cloudformation deploy --stack-name lattice-ec2 --template-file ec2-formation.yaml --region $(REGION) --capabilities CAPABILITY_NAMED_IAM

    ecs:
    	cfn-lint ecs-formation.yaml
    	aws cloudformation deploy --stack-name lattice-ecs --template-file ecs-formation.yaml --region $(REGION) --capabilities CAPABILITY_NAMED_IAM

    ecs-fastapi:
    	cfn-lint ecs-formation.yaml
    	aws cloudformation deploy --stack-name lattice-ecs --template-file ecs-formation.yaml --region $(REGION) --capabilities CAPABILITY_NAMED_IAM --parameter-overrides ContainerImage=123441.dkr.ecr.us-east-1.amazonaws.com/fastapi-repository:latest HCPath=/healtz

    lattice:
    	aws cloudformation deploy --stack-name lattice-itself --template-file lattice-formation.yaml --region $(REGION) --capabilities CAPABILITY_NAMED_IAM

    build: validate ec2 ecs lattice

    clean: clean-ec2 clean-ecs clean-lattice

As you can see, there is no linting for the Lattice template. The reason is simple: cfn-lint support isn’t ready yet. Some types are broken, and the lack of general availability is painful as well.

Then I added a small FastAPI application, so that there is a REST API to build a container image from. That leads us to CI/CD and GitHub Actions. Obviously, the next step was implementing OIDC support. If you are interested in the topic, I can recommend some documentation:

- about-security-hardening-with-openid-connect

- configuring-openid-connect-in-amazon-web-services

- id_roles_providers_create_oidc

- id_roles_create_for-idp_oidc

If you’re lazy, here is my code:

    GitHubOIDC:
      Type: AWS::IAM::OIDCProvider
      Properties:
        Url: https://token.actions.githubusercontent.com
        ThumbprintList:
          - f879abce0008e4eb126e0097e46620f5aaae26ad # valid until 2023-11-07 23:59:59
        ClientIdList:
          - sts.amazonaws.com
        Tags:
          - Key: Env
            Value: !Ref EnvironmentName

    OIDCRole:
      Type: AWS::IAM::Role
      Properties:
        RoleName: !Sub "${AWS::StackName}-GitHub-to-${ServiceName}-role"
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Federated: !Ref GitHubOIDC
              Action: sts:AssumeRoleWithWebIdentity
              Condition:
                ForAnyValue:StringEquals:
                  token.actions.githubusercontent.com:sub:
                    - !Sub "repo:${OrgName}/${RepoName}:ref:refs/heads/main"
                    - !Sub "repo:${OrgName}/${RepoName}:ref:refs/heads/dev"
                  token.actions.githubusercontent.com:aud: sts.amazonaws.com
        ManagedPolicyArns:
          - "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPowerUser"
        Tags:
          - Key: Env
            Value: !Ref EnvironmentName

First, take a look at ThumbprintList. It’s a somewhat dynamic value, based on the SHA-1 of the GitHub certificate. You can calculate it relatively easily according to this article. The next important thing is the policy’s condition, especially token.actions.githubusercontent.com:sub, which should point to the right repository and branch. You can use ForAnyValue here to allow more than one branch!

Seems easy, right? Great. Now you need to obtain the OIDCRole’s ARN and paste it into the GitHub Actions secrets tab. A simple workflow could look like this:
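
As a rough illustration of the hashing step only, the sketch below computes a SHA-1 fingerprint from a PEM-encoded certificate using Python’s standard library. The function name `thumbprint_from_pem` and the stand-in payload are assumptions made for this example. Which certificate from GitHub’s chain has to be hashed is described in the AWS documentation and is not covered here.

```python
import base64
import hashlib
import ssl


def thumbprint_from_pem(pem_cert: str) -> str:
    """Return the lowercase hex SHA-1 digest of a PEM-encoded certificate."""
    # Strip the PEM armor and base64-decode the body to get the DER bytes.
    der_bytes = ssl.PEM_cert_to_DER_cert(pem_cert.strip())
    return hashlib.sha1(der_bytes).hexdigest()


# Stand-in payload so the sketch runs offline. This is NOT a real certificate;
# paste the actual PEM text of the certificate you want to fingerprint instead.
fake_pem = (
    "-----BEGIN CERTIFICATE-----\n"
    + base64.encodebytes(b"hello").decode("ascii")
    + "-----END CERTIFICATE-----\n"
)

print(thumbprint_from_pem(fake_pem))
```

The result is a 40-character hex string, which is the format ThumbprintList expects.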

    name: Building my awesome docker image
    run-name: ${{ github.actor }} on GitHub Actions 🚀
    on: push
    env:
      AWS_REGION: "us-east-1"
    permissions:
      id-token: write
      contents: read
    jobs:
      Build-FastAPI-docker:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v3
          - name: configure aws credentials
            uses: aws-actions/configure-aws-credentials@v2
            with:
              role-to-assume: ${{ secrets.AWS_ROLE_ARN }}
              aws-region: ${{ env.AWS_REGION }}
          - name: Login to Amazon ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1
          - name: Build, tag, and push docker image to Amazon ECR
            env:
              REGISTRY: ${{ steps.login-ecr.outputs.registry }}
              REPOSITORY: fastapi-repository
              IMAGE_TAG: ${{ github.sha }}
            run: |
              docker build -t $REGISTRY/$REPOSITORY:$IMAGE_TAG ./ecs-api/
              docker push $REGISTRY/$REPOSITORY:$IMAGE_TAG
              docker tag $REGISTRY/$REPOSITORY:$IMAGE_TAG $REGISTRY/$REPOSITORY:latest
              docker push $REGISTRY/$REPOSITORY:latest
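
Because the workflow above runs on every push, it helps to remember how the role’s trust policy decides who may assume it. The sketch below is a simplified Python model of that check, not IAM’s real evaluation logic. The helpers `github_sub_claim` and `trust_policy_allows` and the `my-org/my-repo` names are hypothetical.

```python
def github_sub_claim(org: str, repo: str, branch: str) -> str:
    """Build the `sub` claim GitHub issues for a workflow run on a branch."""
    return f"repo:{org}/{repo}:ref:refs/heads/{branch}"


def trust_policy_allows(
    sub: str,
    aud: str,
    allowed_subs: list[str],
    expected_aud: str = "sts.amazonaws.com",
) -> bool:
    """Simplified model: both the audience and the subject must match."""
    return aud == expected_aud and sub in allowed_subs


# Mirrors the two branches listed in the trust policy (main and dev).
allowed = [
    github_sub_claim("my-org", "my-repo", "main"),
    github_sub_claim("my-org", "my-repo", "dev"),
]

for branch in ("main", "dev", "feature/lattice"):
    sub = github_sub_claim("my-org", "my-repo", branch)
    print(branch, trust_policy_allows(sub, "sts.amazonaws.com", allowed))
```

Under this model, a push to any branch other than main or dev would still start the workflow, but the step that assumes the role would be rejected.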

After that, I got to the main point of the article: the VPC Lattice landscape. The implementation was fun. As Lattice isn’t part of the Dash docset yet, I was forced to use the web docs! And that was where the fun began. For example:

Resource handler returned message: "ALB Target Group does not support health check config (Service:

Or the list of usable AWS::VpcLattice::TargetGroup types, which was documented under Target, as part of the Id description. Pure joy! Ah, and only internal ALBs are supported: you can’t connect an internet-facing one, but you can connect the IP of any solution in the whole universe. So the final implementation was long and rather unexciting:

    AWSTemplateFormatVersion: 2010-09-09
    Description: Run CFn Lattice itself
    Resources:
      EC2TargetGroup:
        Type: AWS::VpcLattice::TargetGroup
        Properties:
          Name: ec2-lattice-tg
          Type: INSTANCE
          Config:
            HealthCheck:
              Enabled: true
              Path: "/"
              Port: 80
              Protocol: HTTP
              Matcher:
                HttpCode: "200"
            Port: 80
            Protocol: HTTP
            ProtocolVersion: HTTP1
            VpcIdentifier: !ImportValue ec2-vpc
          Targets:
            - Id: !ImportValue ec2-instanceid
              Port: 80
          # INSTANCE | IP | LAMBDA | ALB
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      ECSTargetGroup:
        Type: AWS::VpcLattice::TargetGroup
        Properties:
          Name: ecs-lattice-tg
          # INSTANCE | IP | LAMBDA | ALB
          Type: ALB
          Config:
            # HC not supported for ALB
            #HealthCheck:
            #  Enabled: true
            #  Path: "/"
            #  Port: 80
            #  Protocol: HTTP
            #  Matcher:
            #    HttpCode: "200"
            Port: 80
            Protocol: HTTP
            ProtocolVersion: HTTP1
            VpcIdentifier: !ImportValue ecs-vpc
          Targets:
            - Id: !ImportValue ecs-alb-arn
              Port: 80
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      GeneralListener:
        Type: AWS::VpcLattice::Listener
        Properties:
          Name: ec2-80
          Port: 80
          Protocol: HTTP
          ServiceIdentifier: !Ref Service
          DefaultAction:
            Forward:
              TargetGroups:
                - TargetGroupIdentifier: !Ref EC2TargetGroup
                  Weight: 10
                - TargetGroupIdentifier: !Ref ECSTargetGroup
                  Weight: 10
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      ECSGeneralSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: lattice-ecs-too-open-security-group
          VpcId: !ImportValue ecs-vpc
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 80
              ToPort: 80
              CidrIp: !ImportValue ecs-vpc-cidr
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      EC2GeneralSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: lattice-ecs-too-open-security-group
          VpcId: !ImportValue ec2-vpc
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 80
              ToPort: 80
              CidrIp: !ImportValue ec2-vpc-cidr
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      ECSVpcAssocination:
        Type: AWS::VpcLattice::ServiceNetworkVpcAssociation
        Properties:
          SecurityGroupIds: [!Ref ECSGeneralSecurityGroup]
          ServiceNetworkIdentifier: !Ref LatticeServiceNetwork
          VpcIdentifier: !ImportValue ecs-vpc
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      EC2VpcAssocination:
        Type: AWS::VpcLattice::ServiceNetworkVpcAssociation
        Properties:
          SecurityGroupIds: [!Ref EC2GeneralSecurityGroup]
          ServiceNetworkIdentifier: !Ref LatticeServiceNetwork
          VpcIdentifier: !ImportValue ec2-vpc
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      LatticeServiceNetwork:
        Type: AWS::VpcLattice::ServiceNetwork
        Properties:
          AuthType: NONE
          Name: awesome-service-network
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      Service:
        Type: AWS::VpcLattice::Service
        Properties:
          AuthType: NONE
          Name: awesome-service
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

      ServiceNetworkAssocination:
        Type: AWS::VpcLattice::ServiceNetworkServiceAssociation
        Properties:
          ServiceIdentifier: !Ref Service
          ServiceNetworkIdentifier: !Ref LatticeServiceNetwork
          Tags:
            - Key: Owner
              Value: kuba
            - Key: Project
              Value: blogpost

As you can see, a Listener needs TargetGroups and has to be linked with a Service. The Service needs to be connected with a ServiceNetwork. The ServiceNetwork, in turn, needs an association with each VPC, along with a SecurityGroup.
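
The same chain of dependencies can be written down as a small graph. The sketch below uses Python’s standard `graphlib` module to derive one valid creation order. The logical IDs are taken from the template above, while the dependency sets are read off its `!Ref` usages, so treat them as an interpretation rather than CloudFormation’s own resolution.

```python
from graphlib import TopologicalSorter

# Each key depends on every resource in its value set
# (spelling of the logical IDs follows the template).
dependencies = {
    "GeneralListener": {"Service", "EC2TargetGroup", "ECSTargetGroup"},
    "ServiceNetworkAssocination": {"Service", "LatticeServiceNetwork"},
    "EC2VpcAssocination": {"LatticeServiceNetwork", "EC2GeneralSecurityGroup"},
    "ECSVpcAssocination": {"LatticeServiceNetwork", "ECSGeneralSecurityGroup"},
}

# static_order() yields dependencies before the resources that need them.
creation_order = list(TopologicalSorter(dependencies).static_order())

for position, logical_id in enumerate(creation_order, start=1):
    print(position, logical_id)
```

CloudFormation works out this ordering on its own from the references, so the sketch is only meant to show why the listener and the associations come last.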

Testing

After implementation, I was able to execute the flow:

    make build
    git push -f # image building
    make ecs-fastapi

Next, I grabbed the service DNS entry and, with that in the clipboard, started an SSM session with my standalone instance. The result was as expected:

    [root@ip-10-192-20-222 bin]# curl -si awesome-service-03ce89237939acfce.7d67968.vpc-lattice-svcs.us-east-1.on.aws | grep content-type
    content-type: text/html
    [root@ip-10-192-20-222 bin]# curl -si awesome-service-03ce89237939acfce.7d67968.vpc-lattice-svcs.us-east-1.on.aws | grep content-type
    content-type: application/json
    [root@ip-10-192-20-222 bin]# curl -si awesome-service-03ce89237939acfce.7d67968.vpc-lattice-svcs.us-east-1.on.aws | grep content-type
    content-type: application/json
    [root@ip-10-192-20-222 bin]# curl -si awesome-service-03ce89237939acfce.7d67968.vpc-lattice-svcs.us-east-1.on.aws | grep content-type
    content-type: text/html

I was able to hit the service endpoint and received two independent responses from two different sources (FastAPI responded with JSON, and Nginx with HTML). Yay!
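
The mix of responses follows from the listener forwarding to both target groups with equal weights (10 and 10). As a rough illustration, the sketch below simulates weighted selection in Python. It assumes that traffic is split in proportion to the weights, which is a modelling assumption and not a description of how VPC Lattice picks targets internally. The function name `pick_target_group` is hypothetical.

```python
import random
from collections import Counter


def pick_target_group(weights: dict[str, int], rng: random.Random) -> str:
    """Pick one target group with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Same names and weights as in the listener's default action.
weights = {"ec2-lattice-tg": 10, "ecs-lattice-tg": 10}
rng = random.Random(42)  # fixed seed so repeated runs give the same split

requests = 10_000
hits = Counter(pick_target_group(weights, rng) for _ in range(requests))

for name in weights:
    print(f"{name}: {hits[name] / requests:.1%}")
```

With equal weights, each target group ends up with roughly half of the simulated requests, which matches the alternating HTML and JSON answers seen with curl.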

Summary

VPC Lattice presents some exciting possibilities for programmers in the networking area. As I dived into the implementation of services, I became convinced that it’s a mesh-like solution, heavily inspired by Kubernetes’ approach to services.

Additionally, Lattice’s networking capabilities offer an excellent opportunity for developers to explore distributed systems and service-oriented architecture. With VPC Lattice, developers can build complex networking applications that enable real-time communication between different services, which is critical for building scalable and robust applications, especially with distributed teams.

I’m waiting for wider service availability and better documentation. However, even in its current state, I was able to implement a working solution, so it’s not that bad. Also, use OIDC with your pipeline whenever possible! It’s easy, fast, and secure. Reducing the risk of credential leaks is always a good thing.

Code

As always you can find my code here: