Install Keycloak on ECS

Welcome

If you have read my latest post about accessing RHEL in the cloud, you may have noticed that we’re accessing the Cockpit console via SSM Session Manager port forwarding. I’m not saying that’s bad, it’s just not ideal (but cheap). Today I realised that using Amazon WorkSpaces Secure Browser could be interesting, and fun as well.

Unfortunately, this solution requires an Identity Provider that can serve us SAML 2.0. The problem is that most providers, like Okta or Ping, are enterprise-oriented, and you can’t play with them easily. Of course, you can request a free trial, but it lasts only 30 days, and there is no single-user account.

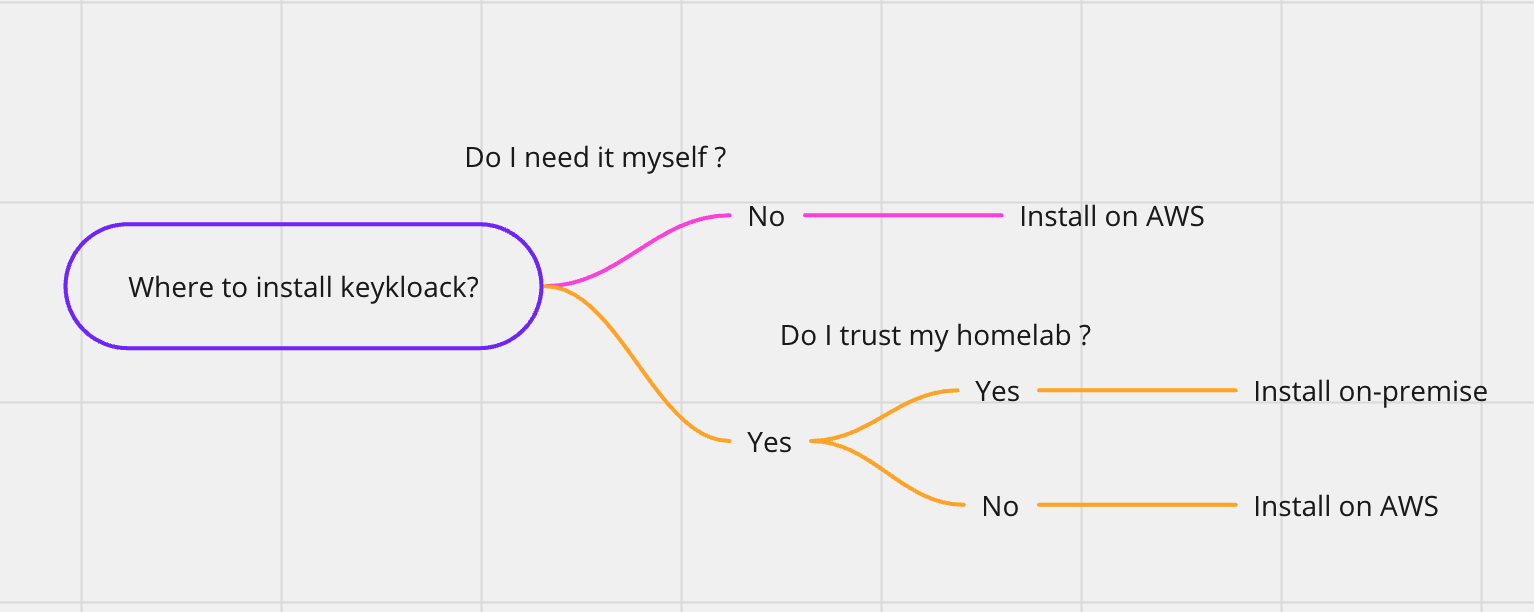

That is why I decided to use Keycloak. Then I just needed to decide where to deploy it: in the cloud or in the home lab. Thankfully we have a mind map.


That is why today we will install Keycloak on AWS, and in part two we will connect it with WorkSpaces and access the RHEL console through Cockpit as an enterprise-like user.

Keycloak intro

But hey! What is Keycloak? In simple words, it is an open-source Identity and Access Management tool.

Also, Keycloak provides user federation, strong authentication, user management, fine-grained authorization, and more. You can read more on the GitHub project page, and star it as well.

Services list

To make it a bit more interesting, I will deploy it with:

- ECS (Fargate)

- AWS Application Load Balancer

- separate database, where today we will play with Serverless Aurora in Postgres mode

- Route53 for domain management

OK, good. Finally, we will deploy something different, right?

First steps

At the beginning, I’d recommend building a basic project with the network, ECS components, database, and ALB. Let’s call it an almost-dry run. Just take a look at the code, broken into smaller parts.

import * as cdk from 'aws-cdk-lib';

import { Construct } from 'constructs';

import * as ec2 from 'aws-cdk-lib/aws-ec2';

import * as rds from 'aws-cdk-lib/aws-rds';

import * as ecs from 'aws-cdk-lib/aws-ecs';

import * as elbv2 from 'aws-cdk-lib/aws-elasticloadbalancingv2'

At the top, as you can see, I have the imports. It’s worth mentioning that Aurora Serverless V2 lives in the aws-rds package, and modern load balancers like ALB are included in aws-elasticloadbalancingv2, not the classic aws-elasticloadbalancing.

First, we have the network. Today we will have a regular 3-tier architecture with really small subnets: a /28 mask, which means 11 usable IPs (the regular 16 minus the 5 that AWS reserves in every subnet). Besides that, we use two AZs for Aurora HA, so we get 2 NAT Gateways as well.

const vpc = new ec2.Vpc(this, 'VPC', {

ipAddresses: ec2.IpAddresses.cidr("10.192.0.0/20"),

maxAzs: 2,

enableDnsHostnames: true,

enableDnsSupport: true,

restrictDefaultSecurityGroup: true,

subnetConfiguration: [

{

cidrMask: 28,

name: "public",

subnetType: ec2.SubnetType.PUBLIC

}, {

cidrMask: 28,

name: "private",

subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS

}, {

cidrMask: 28,

name: "database",

subnetType: ec2.SubnetType.PRIVATE_ISOLATED

}

]

});
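If the /28 arithmetic feels too tight, a quick sanity check in plain TypeScript (no CDK involved) may help. This is only a sketch: the function names are mine and are not part of any AWS library. It relies on the rule that AWS reserves 5 addresses in every subnet.

```typescript
// Addresses AWS reserves in every subnet:
// network address, VPC router, DNS, one kept for future use, and broadcast.
const AWS_RESERVED_PER_SUBNET = 5;

// Total IPv4 addresses in a block with the given prefix length, e.g. /28 -> 16.
function totalAddresses(cidrMask: number): number {
  if (!Number.isInteger(cidrMask) || cidrMask < 0 || cidrMask > 32) {
    throw new RangeError(`invalid IPv4 prefix length: ${cidrMask}`);
  }
  return 2 ** (32 - cidrMask);
}

// Addresses left for our own resources after the AWS reservation.
function usableAddresses(cidrMask: number): number {
  return Math.max(totalAddresses(cidrMask) - AWS_RESERVED_PER_SUBNET, 0);
}

// How many subnets of size subnetMask fit into a VPC of size vpcMask.
function subnetsThatFit(vpcMask: number, subnetMask: number): number {
  if (subnetMask < vpcMask) {
    return 0;
  }
  return 2 ** (subnetMask - vpcMask);
}

console.log(usableAddresses(28)); // 16 - 5 = 11
console.log(subnetsThatFit(20, 28)); // 2^(28 - 20) = 256
```

With two AZs and three tiers we only consume six of those /28 blocks, so the /20 VPC leaves plenty of room to grow.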

Next, we have a very simple setup of the Application Load Balancer. It listens on port 80 (no TLS), it’s internet-facing, and it lives in our VPC, inside the subnets of type “PUBLIC”.

const alb = new elbv2.ApplicationLoadBalancer(this, 'ApplicationLB', {

vpc: vpc,

vpcSubnets: {

subnetType: ec2.SubnetType.PUBLIC,

},

internetFacing: true

});

const listener = alb.addListener('Listener', {

port: 80,

open: true,

});

The same goes for the database: we have a very basic setup of Aurora Postgres, without security groups or deletion protection. However, we’re placing it in the correct set of subnets and, more importantly, we’re checking the Postgres engine version, as the latest one is not always supported. If you would like to check it on your own, here is the command that shows the available engines.

aws rds describe-db-engine-versions \

--engine aurora-postgresql \

--filters Name=engine-mode,Values=serverless

BUT, if you would like to use Aurora Serverless V2, you need to use rds.DatabaseCluster. Our code then looks like this, and as you can see, we can use a higher version of Postgres:

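const theAurora = new rds.DatabaseCluster(this, 'AuroraCluster', {

engine: rds.DatabaseClusterEngine.auroraPostgres({

// NOTE: pick a version that Aurora Serverless V2 supports in your region

version: rds.AuroraPostgresEngineVersion.VER_16_1

}),

vpc: vpc,

credentials: {

username: 'keycloak',

// WARNING: a plain-text password is acceptable only in a throwaway test, never do this for real

password: cdk.SecretValue.unsafePlainText('password')

},

// NOTE: disable deletion protection only for testing

deletionProtection: false,

securityGroups: [

// an ec2.SecurityGroup defined elsewhere in the stack

auroraSecurityGroup

],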

vpcSubnets: {

subnetType: ec2.SubnetType.PRIVATE_ISOLATED

},

writer: rds.ClusterInstance.serverlessV2('ClusterInstance', {

scaleWithWriter: true

}),

});

Great, now we can configure:

- ECS Cluster with Fargate Capacity Providers

- Fargate Task Definition

- container

- ECS Service

- ALB TargetGroup registration

and check if we will be able to access our container from the regular Internet.

const ecsCluster = new ecs.Cluster(this, 'EcsCluster', {

clusterName: 'keycloak-ecs-cluster',

// NOTE: add some logging

containerInsights: true,

enableFargateCapacityProviders: true,

vpc: vpc

})

const ecsTaskDefinition = new ecs.FargateTaskDefinition(

this,

'TaskDefinition',

{

memoryLimitMiB: 512,

cpu: 256,

runtimePlatform: {

operatingSystemFamily: ecs.OperatingSystemFamily.LINUX,

cpuArchitecture: ecs.CpuArchitecture.X86_64

}

});

const container = ecsTaskDefinition.addContainer('keycloak', {

image: ecs.ContainerImage.fromRegistry('nginx'),

portMappings: [

{

containerPort: 80,

protocol: ecs.Protocol.TCP

}

]

});

const ecsService = new ecs.FargateService(this, 'EcsService', {

cluster: ecsCluster,

taskDefinition: ecsTaskDefinition,

vpcSubnets: {

subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS

}

});

ecsService.registerLoadBalancerTargets({

containerName: 'keycloak',

containerPort: 80,

newTargetGroupId: 'ECS',

listener: ecs.ListenerConfig.applicationListener(

listener,

{ protocol: elbv2.ApplicationProtocol.HTTP })

});

As you can see, the logic here is straightforward. First, we need a cluster with Fargate capacity providers enabled, placed in our VPC. Then we have a task definition, which needs to be an instance of the ecs.FargateTaskDefinition class. Next, we add a container with the Nginx Docker image and a port mapping. Additionally, we need a service that combines all the elements. At the end, we register our service as a load balancer target.

This configuration should allow us to validate our application skeleton and make sure that the basic components are up and that we didn’t mess something up. That is how I like to work: build a simple landscape, then tweak it according to the needs.

Keycloak configuration

Now, we need to clarify a few things. First, as we’re planning to run Keycloak in a container, we need to go through this doc; then, as we’re using Aurora, we need this.

Second, there is no support for IAM roles, which is why we need to use a username and password. However, if you feel stronger than me with the AWS SDK for Java and have some time, I’m sure the Keycloak team will be more than happy to review your PR.

So, after reading the documentation, it looks like I need to add the JDBC driver to the image, which requires building a custom image. It will be very simple, but it requires some project modification. Additionally, we need some environment variables:

const container = ecsTaskDefinition.addContainer('keycloak', {

image: ecs.ContainerImage.fromRegistry('quay.io/keycloak/keycloak:24.0'),

environment: {

KEYCLOAK_ADMIN: "admin",

KEYCLOAK_ADMIN_PASSWORD: "admin",

KC_DB: "postgres",

KC_DB_USERNAME: "keycloak",

KC_DB_PASSWORD: "password",

KC_DB_SCHEMA: "public",

KC_LOG_LEVEL: "INFO, org.infinispan: DEBUG, org.jgroups: DEBUG",

},

portMappings: [

{

containerPort: 80,

protocol: ecs.Protocol.TCP

},

],

});

Stop. Why doesn’t it work?

Looks good, right? No, no, and no! Making this project work took much more time than I expected. Why? That’s simple: Keycloak is complex software, so a lot of configuration is needed. However, let’s start from the beginning.

Building custom docker image

I decided that a public Docker image with Aurora support would be great, and maybe helpful for someone. That is why you can find it on quay. The whole project repo is also public and accessible here. Besides GitHub Actions, the Dockerfile is the most important part, so here we go:

ARG VERSION=25.0.1

ARG BUILD_DATE=today

FROM quay.io/keycloak/keycloak:${VERSION} AS builder

# ARGs declared before FROM are not visible inside a stage unless re-declared

ARG BUILD_DATE

LABEL vendor="3sky.dev" \

maintainer="Kuba Wolynko <kuba@3sky.dev>" \

name="Keycloak for Aurora usage" \

arch="x86" \

build-date=${BUILD_DATE}

# Enable health and metrics support

ENV KC_HEALTH_ENABLED=true

ENV KC_METRICS_ENABLED=true

ENV KC_DB=postgres

ENV KC_DB_DRIVER=software.amazon.jdbc.Driver

WORKDIR /opt/keycloak

# use ALB on top, self-sign is fine here

RUN keytool -genkeypair \

-storepass password \

-storetype PKCS12 \

-keyalg RSA \

-keysize 2048 \

-dname "CN=server" \

-alias server \

-ext "SAN:c=DNS:localhost,IP:127.0.0.1" \

-keystore conf/server.keystore

ADD --chmod=0666 https://github.com/awslabs/aws-advanced-jdbc-wrapper/releases/download/2.3.6/aws-advanced-jdbc-wrapper-2.3.6.jar /opt/keycloak/providers/aws-advanced-jdbc-wrapper.jar

RUN /opt/keycloak/bin/kc.sh build

FROM quay.io/keycloak/keycloak:${VERSION}

COPY --from=builder /opt/keycloak/ /opt/keycloak/

ENTRYPOINT ["/opt/keycloak/bin/kc.sh"]

It’s worth mentioning that the build command needs to be executed after adding aws-advanced-jdbc-wrapper and after enabling health checks. Also, I’m using a self-signed certificate, as AWS will provide a public cert at the ALB level.

Documentation

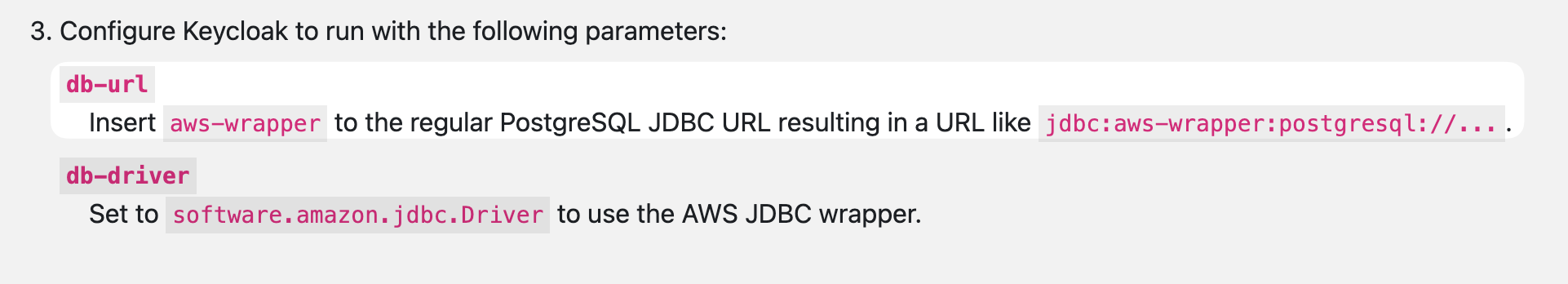

When you go through the official Keycloak documentation, you will come across a note about the JDBC URL.

I’m not a native speaker, so for me, it means: put aws-wrapper into your JDBC URL. At first I thought that was not true, because the result would be jdbc:aws-wrapper:postgresql://, which I believed the application could not consume.

Edit: I was wrong. aws-wrapper needs to be included in the JDBC URL to use the advanced wrapper’s functions. However, the environment variable KC_DB_DRIVER=software.amazon.jdbc.Driver needs to be present in the Dockerfile before running the /opt/keycloak/bin/kc.sh build command. So, to work with Aurora PostgreSQL, our connection string must be:

KC_DB_URL: 'jdbc:aws-wrapper:postgresql://' + theAurora.clusterEndpoint.hostname + ':5432/keycloak',

Note: keycloak at the end is the database name, and can be customized.
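To avoid typing that string by hand in several places, the URL can be assembled by a tiny helper. This is a minimal sketch in plain TypeScript; `auroraPostgresJdbcUrl` and its options are hypothetical names of mine, not part of Keycloak or the AWS JDBC wrapper, and the endpoint in the example is made up.

```typescript
// Hypothetical helper: builds the JDBC URL in the aws-wrapper form shown above.
interface AuroraJdbcOptions {
  host: string; // Aurora cluster endpoint hostname
  port?: number; // defaults to the PostgreSQL port, 5432
  database?: string; // defaults to "keycloak", as in this post
}

function auroraPostgresJdbcUrl(opts: AuroraJdbcOptions): string {
  const { host, port = 5432, database = 'keycloak' } = opts;
  if (host.trim() === '') {
    throw new Error('host must not be empty');
  }
  // The "aws-wrapper" segment is what routes the connection through
  // software.amazon.jdbc.Driver instead of the plain PostgreSQL driver.
  return `jdbc:aws-wrapper:postgresql://${host}:${port}/${database}`;
}

// Example with a made-up endpoint name.
const exampleUrl = auroraPostgresJdbcUrl({
  host: 'example.cluster-abc123.eu-central-1.rds.amazonaws.com',
});
console.log(exampleUrl);
```

In the CDK stack the host would come from theAurora.clusterEndpoint.hostname. That value is an unresolved token at synth time, but CDK encodes tokens as strings, so the interpolation should behave the same way as the string concatenation above.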

Believe it or not, this misunderstanding cost me around a week of debugging…

Cookie not found

When, after a while, I was able to start the app and log in with admin/admin, I was welcomed with this error message:

Cookie not found. Please make sure cookies are enabled in your browser.

After some investigation and reading Stack Overflow threads, I decided to add TLS and see what would happen. To do that in a rather simple manner, a hosted zone with a publicly registered domain was needed. You can read about setting it up here. Adding it to the project is simple (route53 is imported from aws-cdk-lib/aws-route53, and acme is my alias for aws-cdk-lib/aws-certificatemanager):

const zone = route53.HostedZone.fromLookup(this, 'Zone', {domainName: DOMAIN_NAME});

new route53.CnameRecord(this, 'cnameForAlb', {

recordName: 'sso',

zone: zone,

domainName: alb.loadBalancerDnsName,

ttl: cdk.Duration.minutes(1)

});

const albcert = new acme.Certificate(this, 'Certificate', {

domainName: 'sso.' + DOMAIN_NAME,

certificateName: 'Testing keycloak service', // Optionally provide a certificate name

validation: acme.CertificateValidation.fromDns(zone)

});

this.listener = alb.addListener('Listener', {

port: 443,

open: true,

certificates: [albcert]

});

Health check

This one is tricky. The target group attached to the Application Load Balancer requires healthy targets. Using the native ECS method, as described above, does not meet our needs, as we need:

- a dedicated path, /health

- a different port (9000) than the main container port (8443)

- if we decided to stick with the / path, we would need to accept HTTP 302

As you can see, we need to change the method to a more traditional one:

theListner.addTargets('ECS', {

port: 8443,

targets: [ecsService],

healthCheck: {

port: '9000',

path: '/health',

interval: cdk.Duration.seconds(30),

timeout: cdk.Duration.seconds(5),

healthyHttpCodes: '200'

}

});

NOTE: the default health check port could be changed, but that adds additional complexity.
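To see why the / path is a problem when the matcher is '200', here is a simplified sketch of how a success-code matcher can be evaluated. It is plain TypeScript written for illustration, not AWS code; `matchesHealthyCodes` is a hypothetical name, and I assume the matcher uses the comma-and-range syntax accepted by healthyHttpCodes (for example '200', '200,302' or '200-399').

```typescript
// Returns true when the status code is covered by a matcher such as
// "200", "200,302" or "200-399" (simplified, for illustration only).
function matchesHealthyCodes(matcher: string, status: number): boolean {
  return matcher.split(',').some((part) => {
    const bounds = part.trim().split('-').map(Number);
    const low = bounds[0] ?? NaN;
    const high = bounds[1] ?? low; // a single code is a range of one
    // Comparisons with NaN are always false, so malformed parts never match.
    return status >= low && status <= high;
  });
}

console.log(matchesHealthyCodes('200', 200)); // /health on port 9000 -> healthy
console.log(matchesHealthyCodes('200', 302)); // redirect from "/" -> unhealthy
console.log(matchesHealthyCodes('200-399', 302)); // only passes if we widen the matcher
```

Widening the matcher would make the redirect pass, but a dedicated /health endpoint says more about the application than a redirect does, which is why I prefer it.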

Build time

Playing with ECS and the database in the same CDK stack is not the best possible idea. Why? Let’s imagine a situation where your health checks are failing. The deployment is in progress and still rolling. During this period, you can’t change your stack. But you can delete it… and recreate it. Even if the networking part is fast, spinning Aurora up and down can add around 12 minutes to each change. That’s not bad, but still, it’s quite easy to avoid. The change was simple: I created a new stack dedicated to ECS and split the app into two parts:

- infra (network and database)

- ECS (container and services)

This simple action shows that the modularity of Infrastructure as Code could be more important than we usually think.
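The split can be sketched roughly as below. Treat it as an outline under assumptions rather than the exact code from my repository: the class names, stack IDs, and the EcsStackProps interface are made up for illustration, and the database, ALB, and service are left out to keep it short. It needs aws-cdk-lib and constructs installed, as in any CDK project.

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import * as ecs from 'aws-cdk-lib/aws-ecs';

// Slow-changing part: network (and, in the real project, Aurora).
class InfraStack extends cdk.Stack {
  public readonly vpc: ec2.Vpc;

  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);
    this.vpc = new ec2.Vpc(this, 'VPC', { maxAzs: 2 });
  }
}

// Hypothetical props: everything the ECS stack needs from the infra stack.
interface EcsStackProps extends cdk.StackProps {
  vpc: ec2.IVpc;
}

// Fast-changing part: cluster, task definition, service.
class EcsStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props: EcsStackProps) {
    super(scope, id, props);
    new ecs.Cluster(this, 'EcsCluster', {
      vpc: props.vpc,
      enableFargateCapacityProviders: true,
    });
  }
}

const app = new cdk.App();
const infra = new InfraStack(app, 'KeycloakInfra');
// Passing the VPC object creates a cross-stack reference,
// so CDK deploys the infra stack before the ECS stack.
new EcsStack(app, 'KeycloakEcs', { vpc: infra.vpc });
```

With this layout, a stuck ECS deployment can be torn down with cdk destroy KeycloakEcs while the network and the database stay untouched.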

Final declaration

Slowly getting to the end, the ECS-dedicated part looks like this:

ecsTaskDefinition.addContainer('keycloak', {

image: ecs.ContainerImage.fromRegistry(CUSTOM_IMAGE),

environment: {

KEYCLOAK_ADMIN: 'admin',

KEYCLOAK_ADMIN_PASSWORD: 'admin',

KC_DB_USERNAME: 'keycloak',

KC_DB_PASSWORD: theSecret.secretValueFromJson('password').toString(),

KC_HEALTH_ENABLED: 'true',

KC_HOSTNAME_STRICT: 'false',

KC_DB: 'postgres',

KC_DB_URL: 'jdbc:aws-wrapper:postgresql://' + theAurora.clusterEndpoint.hostname + ':5432/keycloak'

},

portMappings: [

{

containerPort: 8443,

protocol: ecs.Protocol.TCP

}, {

containerPort: 9000,

protocol: ecs.Protocol.TCP

}

],

logging: new ecs.AwsLogDriver(

{streamPrefix: 'keycloak'}

),

command: ['start', '--optimized']

});

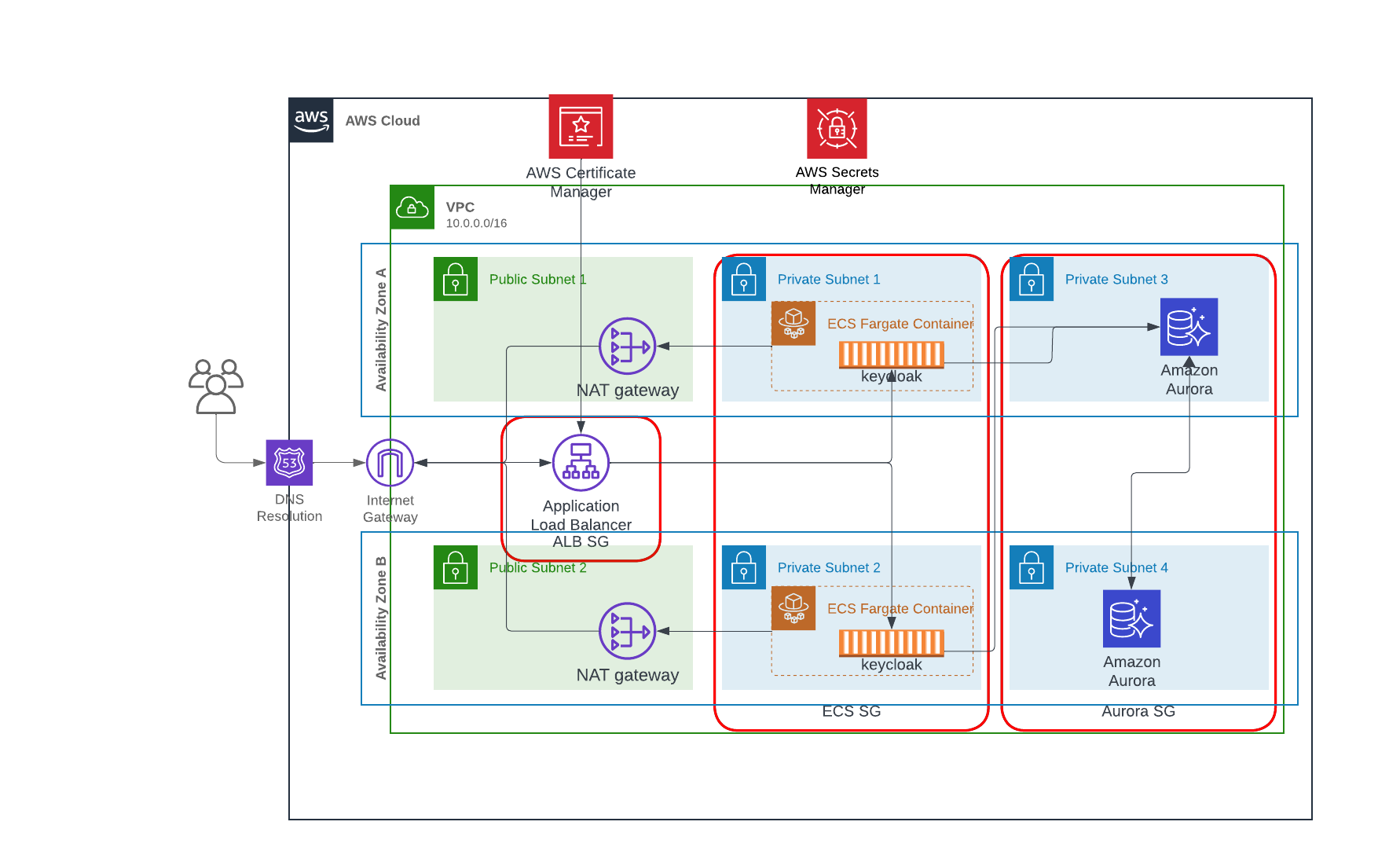

Final architecture

As we all love images, that’s the final digram of our setup:

|

|---|

Summary

That’s the end of setting up Keycloak on ECS with Aurora Postgres. I didn’t see it anywhere on the Internet, so, at least for the moment, this is the only working example and blog post publicly available. Additionally, the solution was tested with versions 24.0 and the latest 25.0.1 of the Keycloak base images. What will be next? As I mentioned at the beginning: setting up WorkSpaces with my own SSO solution. First, however, we need to configure Keycloak, which, I’m almost sure, will be fun as well. Ah, and to be fully open, the source code can be found on GitHub.